Today AMD formally revealed the next-generation Radeon GPUs powered by the RDNA 2 architecture, and it looks like they’re going to thoroughly give NVIDIA a run for their money.

What was announced: the Radeon RX 6900 XT, Radeon RX 6800 XT and Radeon RX 6800, with the Radeon RX 6800 XT looking like a very capable GPU that sits right next to NVIDIA's RTX 3080 while seeming to use less power. All three will support Ray Tracing as expected, with AMD adding a "high performance, fixed-function Ray Accelerator engine to each compute unit". However, we're still waiting on The Khronos Group to formally announce the proper release of the vendor-neutral Ray Tracing extensions for Vulkan, which still aren't finished (provisional since March 2020), so for now DirectX RT was all they mentioned.

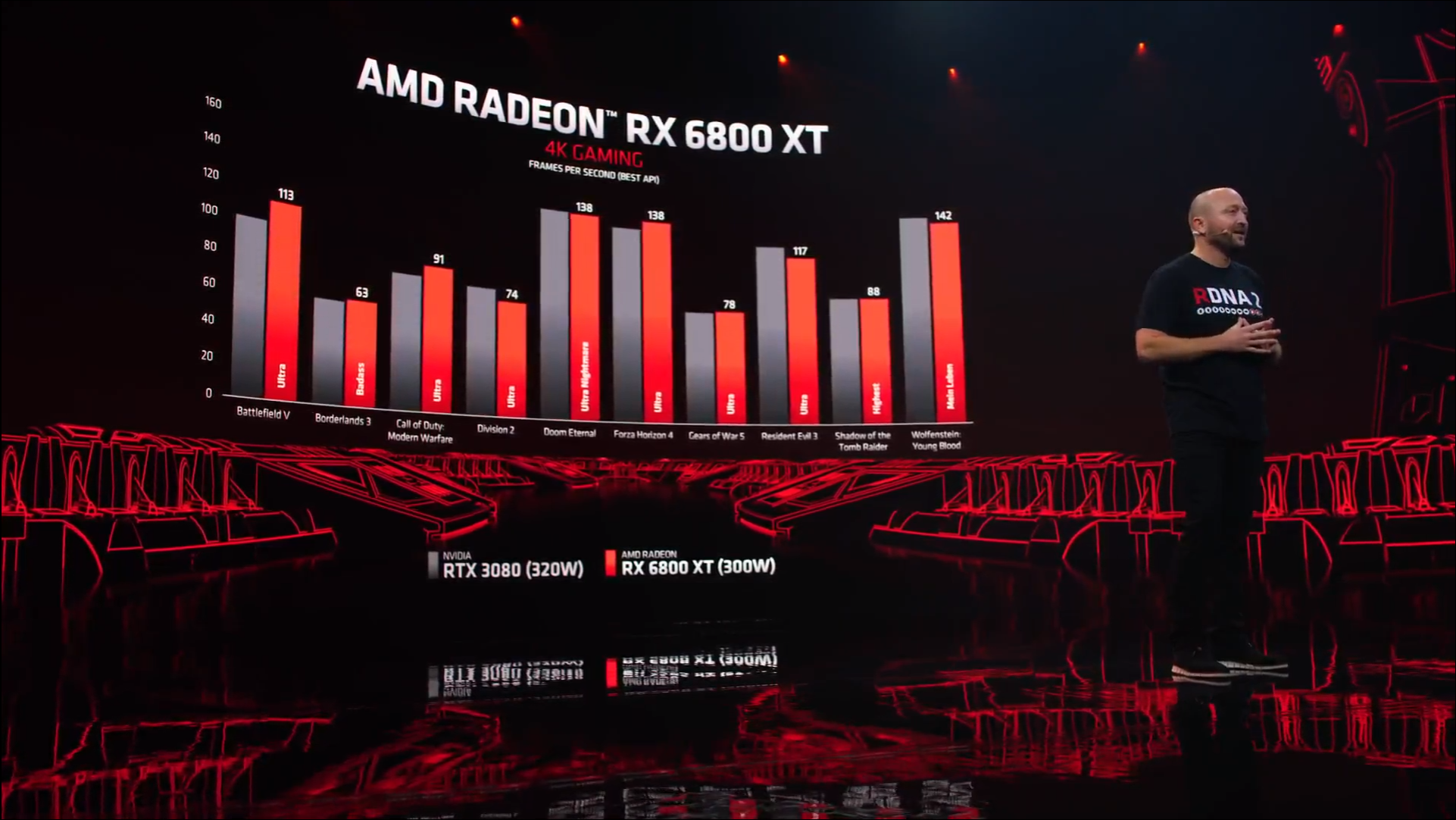

Part of the big improvement in RDNA 2 comes from what they learned with Zen 3 and their new "Infinity Cache", which is a high-performance, last-level data cache they say "dramatically" reduces latency and power consumption while delivering higher performance than previous designs. You can see some of the benchmarks they showed in the image below:

As always, it's worth waiting on independent benchmarks for the full picture as both AMD and NVIDIA like to cherry-pick what makes them look good of course.

Here are the key specifications:

| | RX 6900 XT | RX 6800 XT | RX 6800 |
|---|---|---|---|
| Compute Units | 80 | 72 | 60 |
| Process | TSMC 7nm | TSMC 7nm | TSMC 7nm |
| Game clock (MHz) | 2,015 | 2,015 | 1,815 |
| Boost clock (MHz) | 2,250 | 2,250 | 2,105 |
| Infinity Cache (MB) | 128 | 128 | 128 |
| Memory | 16GB GDDR6 | 16GB GDDR6 | 16GB GDDR6 |
| TDP (Watt) | 300 | 300 | 250 |
| Price (USD) | $999 | $649 | $579 |
| Available (DD/MM/YYYY) | 08/12/2020 | 18/11/2020 | 18/11/2020 |

You shouldn't need to go buying a new case either, as AMD say they had easy upgrades in mind: these new GPUs are built for "standard chassis" with a length of 267mm and two standard 8-pin power connectors, and are designed to operate with existing enthusiast-class 650W-750W power supplies.

There was a big portion of the event dedicated to DirectX, which doesn’t mean much for us, but what we’ve been able to learn from the benchmarks shown is that they’re powerful cards that appear to compete with even NVIDIA’s latest high-end consumer GPUs like the GeForce RTX 3080. So not only are AMD leaping over Intel with the Ryzen 5000, they’re also now leaving NVIDIA out in the cold too. Incredible to see how far AMD has surged in the last few years. This is what NVIDIA and Intel have needed: some strong competition.

How will their Linux support be? You're probably looking at around the likes of Ubuntu 21.04 next April (or comparable distro updates) to see reasonable out-of-the-box support, thanks to newer Mesa drivers and an updated Linux Kernel but we will know a lot more once they actually release and can be tested.

As for what’s next? AMD confirmed that RDNA3 is well into the design stage, with a release expected before the end of 2022 for GPUs powered by RDNA3.

You can view the full event video in our YouTube embed below:


Feel free to comment as you watch: if you have JavaScript enabled, it won't refresh the page.

Additionally if you missed it, AMD also recently announced (October 27) that they will be acquiring chip designer Xilinx.

Cool.

The only detail I'm currently interested in though is WHEN THE FECKING rdna1 PRICES WILL GO DOWN

On an entirely selfish level, hopefully not before I sell my Red Devil

I'll buy an AMD card when they have working Linux drivers available at launch.

I think you should qualify this statement to: you will buy an AMD card *at launch* when the Linux drivers are available *at launch*. Otherwise, it doesn't make much sense.

I'm not waiting six months for the Mesa guy to get their new cards working when Nvidia has Linux drivers available for their new card on day one. I'm not supporting a company that treats my OS of choice like a second-class citizen.

You got it backwards - NVIDIA releases drivers at launch day because they don't care - if the driver is broken, they will release the fixed version after some time, perhaps, maybe. Right now the NVIDIA driver is broken for kernel 5.9, for example.

AMD does things correctly - starting work on supporting new GPUs in kernel early and improving it over time - support for RX 6000 series landed already in kernel 5.9 (released ~3 weeks ago) and Mesa 20.2 (released ~2 weeks ago). We can't say how good the support will be until the cards start showing up, but AMD seems to be gradually improving the process of working with open source. Also, RDNA2 is a refresh of RDNA, not a whole new architecture.

BTW, if you use Ubuntu LTS, then AMD releases closed source version of their drivers to be used until the open source version is provided via LTS point release. So this way you *have* an option of using your GPU at launch.

I'll buy an AMD card when they have working Linux drivers available at launch. I'm not waiting six months for the Mesa guy to get their new cards working when Nvidia has Linux drivers available for their new card on day one. I'm not supporting a company that treats my OS of choice like a second-class citizen.

In defense of the AMD devs, with each generation of GPU and CPU they are getting closer and closer to being ready at launch time. For instance, here, if I'm not mistaken, Big Navi is supported in the latest Mesa code (though not yet perfectly AFAIK). Now we're just missing the Mesa and Linux releases.

Personally, I don't really mind not having these GPUs ready at launch time since they are too expensive for me, but it means that the previous generation prices should go down a little bit! 😉

I doubt many companies really need day 1 releases though, as most companies won't be running on the latest GPUs when they have just come out of the oven. For instance, I don't think Google Stadia is really interested right now in being able to get their hands on the latest GPUs, even though their servers run on AMD (which is also proof that even in a private company, open source apparently matters too, otherwise they would have chosen NVIDIA, which has a better performance/dollar ratio).

Which makes me think Microsoft and Sony are also using AMD GPUs in their consoles, so apparently it's not that bad 😉

Last edited by Creak on 29 October 2020 at 9:59 pm UTC

The 850 should handle the power draw of the 6900XT just fine

Why do people think they need an 850W PSU for a 300W card?

Because it's always better to have more than you need than to need and not have. The 3090RTX is supposed to be a 350w card. According to LTT it can pull almost 500w and caused them noticeable problems on their test system because it only had an 850w PSU. Now do you think I trust AMD's power rating? Do you? If so, why would you do that?

Last edited by jarhead_h on 30 October 2020 at 6:18 am UTC

I always had a NVIDIA card, but now that I am on Linux, AMD all the way and I am very pleased with my 5700 XT and my 580 before that. Runs like a dream and I get the sweet new goodies like ACO first.

Will be a while before I need a new PC, but seems it is time to start saving again so in 3 years I can do a big upgrade again.

![image 2](https://www.gamingonlinux.com/render_chart.php?id=920&type=stats)

$ echo -e "AMD: $((152+130+59+52+50))\nNvidia: $((114+112+83+75+61))"

AMD: 443

Nvidia: 445

Of course I'm only looking at a single source that is most certainly biased, and even then only at the top ten graphics cards, and in October of 2020, the "majority" is indeed still green.

I will probably fall out of that top ten some time early next year–depending on how fast RDNA2 can make their inroads–, as I'm considering upgrading from my current 'pole position' RX580 to an RX6800 (non-XT) that probably won't even see the top twenty for the first half of next year at least... once the initial hype is over and prices hopefully come down a little. USD 580 (EUR 500 at current exchange rates) likely means a store price of EUR 650 or possibly even more after duty and taxes, which means its actual cost is closer to about USD 750-ish, and that is something I am definitely not willing to spend on any graphics card.
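The price conversion above can be checked with the same kind of shell arithmetic used earlier in the thread. This is only a sketch built on the poster's own figures (USD 580 being roughly EUR 500, and an expected store price of EUR 650); the function name `eur_to_usd` is made up for illustration.

```shell
#!/bin/sh
# Convert a EUR price to USD using the rate implied by the post
# (USD 580 ~ EUR 500). Integer arithmetic, so the result is rounded down.
eur_to_usd() {
    eur=$1
    echo $(( eur * 580 / 500 ))
}

# Expected store price from the post, converted back to USD.
eur_to_usd 650
```

With those inputs the result is 754, which lines up with the "USD 750-ish" estimate.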

Analogous for "board power"–I can't say I'm comfortable with 250W, that's about 20% more than what I'd like to stay below. However, the RX580 is still holding up most of the time even when throttled to about 50-75%, so I'd hope to see the higher efficiency of RDNA2 make a definitive dent in the power curve. Summer temperatures the last couple of years meant no power hungry games for a few months; and the choice of a 40°C room, or only playing tetris for a while, is another thing I'm not comfortable with.

I'm definitely curious to see the idle power draw; the RX580 reports 33W at the minimum, which I find ridiculous to say the least, and my only hope is that it's in fact a display error from hwinf... although it really doesn't look like it, the whole system draws 70W on idle, where I'd reasonably expect maybe 40 at best, to no more than say 50 worst-case.

Last edited by Valck on 30 October 2020 at 9:41 am UTC

Because it's always better to have more than you need than to need and not have. The 3090RTX is supposed to be a 350w card. According to LTT it can pull almost 500w and caused them noticeable problems on their test system because it only had an 850w PSU. Now do you think I trust AMD's power rating? Do you? If so, why would you do that?

Bear in mind that the LTT tests were made with a CPU with high power consumption as well (the GPU by itself went slightly over 450W at peak). So an 850W unit may not be enough for such a configuration, because you don't have enough current on the 12V line for both components, but this is an extreme configuration.

IMO, going very high in PSU wattage is not always the best for efficiency, as your computer will probably be idling more than 50% of the time (or more than 90% if you keep it always on... like me), and you will probably get better efficiency with a certified PSU that has a wattage more in line with your total system consumption.
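To put rough numbers on that point, here is a small sketch that shows what fraction of a PSU's rating a given draw represents. The helper name `psu_load_percent` is hypothetical, and the draws are illustrative assumptions: 70 W idle is the whole-system figure reported earlier in the thread, and 450 W under load assumes a 300 W GPU plus 150 W for everything else.

```shell
#!/bin/sh
# Percentage of a PSU's rated wattage used by a given system draw.
# Integer arithmetic, so the result is rounded down.
psu_load_percent() {
    draw_w=$1
    psu_w=$2
    echo $(( draw_w * 100 / psu_w ))
}

# Illustrative draws only (see the assumptions in the text above).
for psu_w in 650 850; do
    echo "${psu_w} W PSU: idle $(psu_load_percent 70 "$psu_w")%, load $(psu_load_percent 450 "$psu_w")%"
done
```

With these assumed numbers both units idle at about a tenth of their rating or less. Where a particular PSU is actually most efficient has to come from its own datasheet, which this sketch does not model.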

Last edited by x_wing on 30 October 2020 at 1:48 pm UTC

Don't quite get what your gripe with ATI/AMD GPUs exactly is. They've announced themselves as a competitive GPU maker with the Radeon 7-thousand-something (or was it 9k-something?) back in the day. And been only getting better. Is it not enough to earn your 'trust' (whatever that means)?

Let's not forget that until this generation, AMD was miles behind Nvidia in terms of performance

As if AMD's GPUs clearly suck compared to NVIDIA's value-wise.

Please consider supporting AMD.

I don't really mind proprietary games, but as a Linux user

I clearly want my computer infrastructure being as open as possible.

Hardware, drivers and libs.

This is where AMD shines, if you're smart enough to value this.

Or just be a conscious customer and evaluate products properly instead of relying on ideologies only.

No they don't, and the diff between them is mostly ideological to begin with. The latter abhors public software model, the former at least partially supports it. So the ideology starts with the vendor in this case, rather than with the consumer.

Not to mention that you're basically beta (or sometimes it's better said alpha lol) testing their drivers for the first few months of use until they actually get to a point that they are ok.

Now don't get me wrong, I hope AMD does become a viable alternative and fierce opponent to Nvidia, but they cannot win my trust overnight. It will be a while until that. I can't trust them in the GPU space just as I cannot trust Intel in the CPU space. They have a long history of mistakes, bad products and laziness (including over-rebranding old products).

I do expect them to make it right and heal their reputation, but until then, I will wait.

And no, I'm not an Nvidia fanboy at all. I just want to go with the product that has the best chance of working well and offers the performance I need. Just as I used to choose Intel over AMD for CPUs in the past (for similar reasons) and eventually the situation made a complete switch to the point I'd avoid Intel like the plague, this could happen also in the GPU space but it's not yet the case.

As for the 'sins' you've mentioned, hasn't NVIDIA been up to roughly the same stuff?

IMO, going very high in PSU wattage is not always the best for efficiency, as your computer will probably be idling more than 50% of the time (or more than 90% if you keep it always on... like me), and you will probably get better efficiency with a certified PSU that has a wattage more in line with your total system consumption.

I got 750W one and so far it was enough. You can get good quality efficient PSU to reduce power wasting:

https://seasonic.com/prime-ultra-titanium

Looks like I'm going with the Sapphire 6800XT all the way.

Time for some GPU Porn: https://phonemantra.com/gigabyte-and-sapphire-unveil-reference-radeon-rx-6800-and-rx-6800-xt/

Looks like I'm going with the Sapphire 6800XT all the way.

I wonder what custom designs from Sapphire will look like. The reference design is already on the level of what they used to make as custom before.

Last edited by Shmerl on 30 October 2020 at 6:17 pm UTC

I never had to write xorg.conf to make my hardware work, nvidia-settings takes care of the settings.

You said it: “nvidia-settings takes care of the settings”. You're experiencing Linux graphics as if you still lived in 2004. It's not normal that you have to use nvidia-settings. It's wrong that you have to use it. Neither Intel nor AMD hardware requires anything similar. This stopped on the AMD side many years ago. The thing is: Nvidia is a decade late in the race.

To defend lunix (as someone who recently defected from green to red, so pls no bully), the nvidia-settings control panel *is* pretty nice. It offers what few Linux config options exist in a nice GUI, with a multimonitor positioning/resolution/Hz section to boot (something some DEs' settings panels may fall short of). With AMD, I have no control panel. :c

Also, and brace yourselves, but G-SYNC works out of the box in Linux. FreeSync doesn't, and it can barely be said to work *at all* with its "only-up-to-90 Hz" limitation. (The downside is that G-SYNC monitor wouldn't work at all without Nvidia proprietary drivers, or an AMD card, so I ditched it along with my 1080 Ti. Hehe.)

It's hard to know what the facts are. (Nature is like this. No, Nature is actually like this.) Past arguments about the facts, it's all philosophy. (If Nature is unjust, change Nature! No, I'm not interested in justice; only efficacy.) But then choice of philosophy can circle back around to fact problems. (Can Nature be changed at all? Will such changes result in a juster world?) But then those can be addressed again by philosophy. (I don't care; it's worth it to die trying; an unjust world is not worth living in. I do care; to struggle in futility is folly.)

What I'm trying to say is BIG NAVIIII HYPE WOOOOOO!!!!!!11

Congrats AMD!

I got myself a 5700XT in early January.

Experience was (still is) absolutely flawless.

Worked out-of-the-box.

Performance is great for playing games in FHD and WQHD high settings.

I can't tell anything about release time but, indeed, I heard it was *rough*. :)

Indeed it was. My 5700 has been chugging along for the past few months without any problems and hiccups. And everything was good - until my 5500XT (in a second machine) met kernel 5.8.13 and later. It will disable a second display with any kernel > 5.8.12. No way to activate the second display.

The recipe for problem seems to be the mixture of 2+ displays (resolutions don't matter, one display won't cut it), RDNA and amdgpu. The bugtracker on freedesktop.org is still being fed with rather bizarre bugs.

Hmm. I have a WQHD monitor attached along with a UHD TV.

No issues at all. Never had.

Also, and brace yourselves, but G-SYNC works out of the box in Linux. FreeSync doesn't, and it can barely be said to work *at all* with its "only-up-to-90 Hz" limitation.

Never had any problems with adaptive sync. I'm using LG 27GL850. Adaptive sync is fine up to 144 Hz and LFC works fine as well.

Gsync can't work out of the box unless your monitor has some proprietary module in it. That's an instant no go for me.

Last edited by Shmerl on 31 October 2020 at 11:42 pm UTC

If Cyberpunk 2077 was coming to Linux, I would be looking at that RX 6800 instead of a PS5.

If it's not, it will work with vkd3d-proton eventually. But I'll probably wait until it's discounted. RX 6800 or RX 6800XT will still be quite useful for it.

Last edited by Shmerl on 1 November 2020 at 12:09 am UTC

I had the same thoughts, but that's a lot of money to put toward something that will probably work. Cyberpunk is a special game to me. If there are launch issues, I want to be on a majority platform and not a niche one. Since I'm buying the disc, I can always sell and buy the Steam version later on.

On one hand I don't mind waiting until it's confirmed to be working. On the other hand I don't mind reporting bugs to vkd3d and Wine about what's not working. The only annoying thing would be paying full price when CDPR are ignoring Linux gamers. That's why I'd rather wait until it's discounted or someone gives me a key.

Also, and brace yourselves, but G-SYNC works out of the box in Linux. FreeSync doesn't, and it can barely be said to work *at all* with its "only-up-to-90 Hz" limitation.

Never had any problems with adaptive sync. I'm using LG 27GL850. Adaptive sync is fine up to 144 Hz and LFC works fine as well.

MSI MAG272CQR (165 Hz) here, and I haven't gotten it to work. I still see tearing with v-sync off, and I never did with my G-SYNC monitor.

From the Arch wiki,

Freesync monitors usually have a limited range for VRR that are much lower than their max refresh rate.

...

Although tearing is much less noticeable at higher refresh rates, FreeSync monitors often have a limited range for their VRR of 90Hz, which can be much lower than their max refresh rate.

Supposedly, it only works at 90 Hz? Few Linux games are demanding enough to run that low, though, so I haven't been able to test.

And, from amd.com:

For FreeSync to work in OpenGL applications, V-Sync must be turned ON.

(lol! In this case, my "testing" is moot since, beyond no tearing with v-sync off, I have no idea what adaptive sync is or does, sorry.)

FreeSync enable setting does not retain after display hotplug or system restart (e.g., need to manually re-enable FreeSync via terminal command)

(Err...

DISPLAY=:0 xrandr --output DisplayPort-# --set "freesync" 1

Ok, lemme just add that to my startup script--)

In multi-display configurations, FreeSync will NOT be engaged (even if both FreeSync displays are identical)

(--oh, nevermind. Maybe this is why I can't get it to work? I have multiple monitors.)
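For anyone who does want to try the startup-script route, here is a rough sketch of how the `xrandr` command quoted above could be generated for every connected DisplayPort output instead of hard-coding the `#`. The function names are made up for illustration, the `freesync` property is taken only from the AMD note quoted above (the sketch does not check whether a given driver exposes it), and the multi-display caveat still applies.

```shell
#!/bin/sh
# Read `xrandr --query` output on stdin and print the names of
# connected DisplayPort outputs, one per line.
connected_displayports() {
    awk '$2 == "connected" && $1 ~ /^DisplayPort-/ { print $1 }'
}

# Turn each connected output into the command quoted from amd.com.
# The commands are only printed, so they can be reviewed first.
freesync_commands() {
    connected_displayports | while read -r output; do
        echo "xrandr --output $output --set \"freesync\" 1"
    done
}

# Possible use from a startup script (not executed here):
#   DISPLAY=:0 xrandr --query | freesync_commands
```

Printing the commands rather than running them keeps the sketch safe to test; piping the output to `sh` would apply them.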

MSI MAG272CQR (165 Hz) here, and I haven't gotten it to work. I still see tearing with v-sync off, and I never did with my G-SYNC monitor.

Did you enable it for Xorg? Make sure that option is enabled. On Debian it's in /usr/share/X11/xorg.conf.d/10-amdgpu.conf

Option "VariableRefresh" "true"

For FreeSync to work in OpenGL applications, V-Sync must be turned ON.

I don't think that's right. You need to always keep vsync off.

Multiple monitors is always a mess under X. Try first with one.

Last edited by Shmerl on 1 November 2020 at 8:35 pm UTC
