I have now spent two weeks using the open source Nouveau driver on my desktop and I have some results here. Let's see how well Nouveau did for me!

Let's start by talking a bit about GPU reclocking. By default, your GPU will most likely run at a low clock rate to conserve power and prevent unnecessary heat build-up. Only when the extra power is actually needed does the GPU driver reclock it to a higher power level. For the longest time this was a problem for Nouveau, which lacked GPU reclocking and left your GPU at the lowest possible power level every single time. So it's not surprising that Nouveau gained a reputation as a badly under-performing driver.

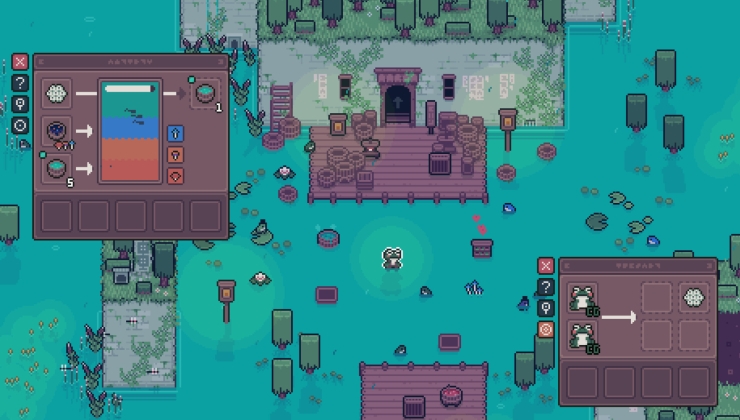

Work has been done recently to fix this issue, and on some cards you can now access higher power levels. This is done manually by echoing values into /sys/class/drm/card0/device/pstate. It's not exactly intuitive, but I was able to reclock my GPU. However, this functionality is still very experimental, and attempting to reach the two highest power levels turned my screen into pixel salad.
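For readers who would rather script this than type `echo` commands, here is a minimal Python sketch of the same manual step. It assumes the pstate path quoted above (the file may live elsewhere on other kernel versions), that it is run as root, and that power levels are selected by short IDs such as `0a`. `read_pstates` and `set_pstate` are hypothetical helper names, not part of any Nouveau tooling, and the sketch deliberately does not parse the file's contents, since their exact layout isn't described here.

```python
from pathlib import Path

# Path as quoted in the article. Assumption: on other kernel versions
# the pstate file may be located somewhere else.
PSTATE_PATH = Path("/sys/class/drm/card0/device/pstate")


def read_pstates(path: Path = PSTATE_PATH) -> list[str]:
    """Return the non-empty lines of the pstate file, unparsed."""
    return [line for line in path.read_text().splitlines() if line.strip()]


def set_pstate(level: str, path: Path = PSTATE_PATH) -> None:
    """Write a power-level ID such as "0a" to the pstate file.

    Roughly equivalent to `echo 0a > .../pstate`; needs root on a real system.
    """
    path.write_text(level + "\n")
```

For example, `set_pstate("0a")` would do the same as echoing `0a` into the file. Given how the highest levels behaved for me, stepping up one level at a time seems the safer approach.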


Luckily, I had some reclocking capability and was able to push my core clock up to around 79% of its maximum. In the case of my GTX 760, that means 966 MHz out of 1228 MHz. The memory clock rate wasn't as good: the 0a power level I had access to only yielded 1620 MHz out of the 6008 MHz maximum, which naturally affects performance in some games.
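The percentages are simple ratios of the reached clock to the maximum clock. Here is a minimal Python sketch using the figures above, assuming, as the text does, that both memory figures are expressed in the same units (the comments below dispute exactly that point). `percent_of_max` is just an illustrative helper name.

```python
# Clock figures quoted above for the author's GTX 760, in MHz.
CORE_REACHED, CORE_MAX = 966, 1228
MEM_REACHED, MEM_MAX = 1620, 6008


def percent_of_max(reached: int, maximum: int) -> float:
    """Share of the maximum clock actually reached, in percent."""
    return 100 * reached / maximum


core_pct = percent_of_max(CORE_REACHED, CORE_MAX)
mem_pct = percent_of_max(MEM_REACHED, MEM_MAX)
print(f"core: {core_pct:.1f}%  memory: {mem_pct:.1f}%")
```

This prints `core: 78.7%  memory: 27.0%`, i.e. the "around 79%" for the core and a little over a quarter for the memory.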

But regardless of these reclocking limitations, performance in most games has been surprisingly good. When I last tried Nouveau, the clock rates were limited to the BIOS values, which are too low to run most 3D games at a reasonable framerate. Now I get over twice as much power out of my GPU, and the results reflect that.

A surprising number of games work just fine with Nouveau, some of them even big titles. Naturally, you are limited to games that run on OpenGL 3.3, so Metro Redux and Bioshock Infinite are out of the question, but the majority of games out there still use GL3. So even though I haven't gotten to test Bioshock Infinite, these past two weeks haven't been completely devoid of games. My gaming has mostly consisted of Mount & Blade: Warband and Cities: Skylines, but, as I promised at the beginning of the experiment, I also widened my horizons a bit and tried to play as many games as possible.

In basic usage, Nouveau mostly worked flawlessly. One annoying thing has been a weird vertical purple line on the left side of my monitor. It's only a pixel or two wide, so it doesn't really eat into my screen space, but it was a bit distracting at first. I barely notice it now, but it's something I'd like to get rid of. The image quality also seems to have taken a hit when I switched from the blob, and everything looks a bit blurrier. I'm not quite sure what causes that, but luckily the difference is barely noticeable and I forgot about it within two days.

Some software can also make Nouveau act a bit wonky. For example, playing a video in Flash (ugh) in a browser and then switching to another workspace while the video is playing seems to somehow mess with the screen buffer and make all kinds of weird things happen on screen. Because of this, I ended up switching to HTML5 video on YouTube with Firefox. The same thing happened with SimpleScreenRecorder when I tried to capture a specific region of the screen, although fullscreen recording worked fine and the area I was recording also looked normal. Maybe someone with more knowledge can explain what is happening there. OBS was also a dead end: it only seemed to output weird, distorted green video even when the preview looked fine. So video production was a bit tricky at times on Nouveau.

Okay, let's talk about something that might interest you more than my video production worries. Let's see how games worked on Nouveau.

I have to say, I was pleasantly surprised by the performance of various games. Games like Mount & Blade: Warband and Xonotic worked fantastically, with an average framerate of 60 FPS and beyond. I also had plenty of success with Source titles, including Counter-Strike: Global Offensive, which also ran at a solid 60 FPS. Valve has certainly optimized their games well. The only Source game that wouldn't cooperate was Left 4 Dead 2, which for some reason complained about glGetError. As far as I know, my card should support the necessary spec even on Nouveau, so it could be a bug in either Nouveau or L4D2. I also tried Dota 2, and after some slight tweaking it too ran at 60 FPS. Sadly, my testing was limited by my abilities, so I couldn't test it extensively.

Note: the following CS:GO video was captured at 720p to compensate for the recording overhead. The game itself ran at 60 FPS at 1080p.

https://www.youtube.com/watch?v=3vo26bzWPlo

https://www.youtube.com/watch?v=H3pZkgHTg0U

Those games are nice, but surely you'll want to see something more. So let's bring in the big guns. Borderlands 2 and The Pre-Sequel were games you wanted me to test, so I downloaded them both. The results were a bit mixed. TPS ran at a solid 60 FPS but crashed after only a couple of minutes of play. BL2, on the other hand, ran at a lower framerate, around 30 FPS, but seemed more stable. As far as I understand, they run on the same engine, so I'm puzzled why one is more stable than the other. The performance difference is easier to explain, since BL2 has more going on in its world than the barren moon of TPS.

The following video was captured at 720p with everything set to low.

https://www.youtube.com/watch?v=bazxhfnd8qY

When you think of a graphically intensive game, what comes to mind? Some people would say Metro: Last Light, so that's what I decided to test. Because Nouveau is missing the GL4 components, I had to go with the original Metro port, but that's better than nothing. The results were surprising: not only did Metro: Last Light run, it actually ran pretty well. It certainly isn't a solid 60 FPS all the time, but without recording it mostly managed to stay above 30 FPS. The more open areas and large amounts of fire had a pretty big impact on the framerate, but I'd consider it playable, if not smooth. I also tried the Unreal Tournament pre-alpha. Not the smoothest experience ever, but once again playable.

https://www.youtube.com/watch?v=EXSMFjT-mA8

https://www.youtube.com/watch?v=y76zJOCNCLU

You also wanted me to find out how well Unity3D games work. Here the results were a bit mixed. Interstellar Marines, unsurprisingly, ran at 20-30 FPS, and Kerbal Space Program didn't do much better. Wasteland 2 also had trouble reaching 30 FPS. Not all Unity3D games performed badly, though. Ziggurat, one of my favourite Unity3D games, ran at a respectable 40-60 FPS at 1080p and at a pretty much solid 60 FPS at 720p. Hand of Fate also ran at an acceptable 30-40 FPS.

The experiment wasn't just sunshine and rainbows though. Let's take a look at the disaster department.

While most games would run, some stubbornly crashed and burned, either after a couple of minutes of gameplay or immediately on launch. The Feral ports were especially unlucky. XCOM: Enemy Unknown crashed as soon as you entered combat, and Empire: Total War was even worse: on startup it would first turn everything on the screen red and then lock up the GPU entirely, making the computer hang. Sometimes the OS managed to recover by restarting Xorg; sometimes it would just hang until I forced a reboot. The same thing happened with The Talos Principle and War Thunder. These GPU lockups were probably the biggest problem I encountered, since they took down everything, not just the game, and recovering from them was time-consuming and annoying.

Luckily, they didn't happen at random. They consistently happened only when certain games were run or when I tried to do something a bit “exotic”. For example, I could trigger one by streaming a game from my desktop to my laptop with Steam's In-Home Streaming and then alt-tabbing on the desktop. If I left the host alone, In-Home Streaming itself worked flawlessly.

In conclusion, Nouveau isn't perfect, but it certainly isn't unusable. A hardcore gamer probably wouldn't find Nouveau all that useful, but for someone who plays more casual games and perhaps cares about software ethics, it could be just powerful enough. Then again, someone like that would probably rather use an AMD card, which has a much more complete open source driver. Nouveau is also not the most newbie-friendly, since you have to navigate quite deep into the system folders just to reclock your GPU. Once that process is automated and you can reliably access the highest possible power states, I can see Nouveau becoming a viable alternative to the blob even for less technical people.

This experiment was a pretty fun one, and I enjoyed testing all kinds of stuff with Nouveau. I imagine some of you might also want to try Nouveau to compare your experience with mine, but a word of warning before you wipe that blob of yours: GPU support in Nouveau is limited. Especially if you are on one of the new Maxwell cards (GTX 750 and the 900 series), you might find that you completely lack graphics, and reclocking only seems to work on Kepler hardware. So if you have a 600 or 700 series card, you might be able to try it out. No promises, though: I'm no Nouveau dev.

Hopefully you found this experiment interesting. I will definitely keep an eye out for Nouveau's progress and probably test it out a lot more frequently than I've done so far. Have you tested Nouveau recently? What have your experiences been with it?

I hope you know that your GTX 760 has GDDR5 memory, so the maximum of 6008 MHz is actually 1502 MHz, because you need to multiply it by 4. So if you tried to run your memory at 1620 MHz, that's 6480 MHz, probably too much. I hope you didn't mix these facts up and that this comment is useless, but I thought I'd share this information.

I'm going by what NVIDIA X Server Settings and pstate say, and according to them the maximum memory frequency I can achieve on the GTX 760 is 6008 MHz. I am no hardware expert and I don't know the exact hardware details, but my point still stands: with Nouveau I can only run the memory at a little over a quarter of the maximum speed.

> but my point still stands: with Nouveau I can only run the memory at a little over a quarter of the maximum speed.

That statement is false. That is not how memory works. If GDDR5 is marketed at 6008 MHz, or, as NVIDIA actually puts it, 6.0 Gb/s, the base speed is not 6.0 Gb/s. [Source](http://www.nvidia.com/gtx-700-graphics-cards/gtx-760/)

It means that the memory chips run at a CK of 1502 MHz and a WCK of 3004 MHz, giving an effective data rate of 6 Gb/s per pin, which is how the marketing department decided to name it. But whenever you want to change the speed of the GPU/memory, you need to take the CK speed into account. That's the base speed the memory is running at.

Configuring the CK speed above 1502MHz is overclocking and will eventually result in artifacts. [Example](https://www.google.nl/search?q=artifacts+gpu&client=ubuntu&hs=hZ&tbm=isch&tbo=u&source=univ&sa=X&ei=TjQgVZ-XIsqaNvP3g6AK&ved=0CCEQsAQ&biw=650&bih=653)

The screenshot you posted in the article also shows artifacts, which could have been produced by overclocked memory.

To be more precise, GDDR5 SGRAM uses a total of three clocks: two write clocks, each associated with two bytes (WCK01 and WCK23), and a single command clock (CK). Taking a GDDR5 with a 5 Gb/s data rate per pin as an example, the CK clock runs at 1.25 GHz and both WCK clocks at 2.5 GHz. [Source](http://en.wikipedia.org/wiki/GDDR5)
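The clock relationship described in this comment can be written out as plain arithmetic. Here is a minimal Python sketch, assuming what the comment states: WCK runs at twice CK, and data is transferred on both edges of WCK, so the per-pin data rate is four times CK. `gddr5_clocks` is a hypothetical helper, and the sketch does not settle which of these figures Nouveau's pstate file or NVIDIA X Server Settings actually reports.

```python
def gddr5_clocks(ck_mhz: float) -> dict[str, float]:
    """Derive GDDR5 clocks from the command clock (CK).

    Assumes WCK = 2 x CK and double data rate on WCK,
    so the per-pin data rate is 4 x CK.
    """
    wck_mhz = 2 * ck_mhz
    data_rate_mbps = 2 * wck_mhz  # two transfers per WCK cycle
    return {
        "ck_mhz": ck_mhz,
        "wck_mhz": wck_mhz,
        "data_rate_gbps": data_rate_mbps / 1000,
    }


print(gddr5_clocks(1250))  # the 5 Gb/s example from the comment
print(gddr5_clocks(1502))  # the GTX 760 CK figure from the comment
```

Both calls reproduce the numbers quoted in this thread: a 1250 MHz CK gives a 2500 MHz WCK and 5 Gb/s per pin, and a 1502 MHz CK gives a 3004 MHz WCK and about 6.008 Gb/s per pin.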

Okay, let's put it simply then.

| Power state | ID | Graphics | Memory |
|---|---|---|---|
| 0 | 07 | 405 MHz | 648 MHz |
| 1 | 0a | 967 MHz | 1620 MHz |
| 2 | 0e/0f | 1228 MHz | 6008 MHz |

Only power states 0 and 1 are usable on Nouveau, so the memory can't reach its maximum, which NVIDIA X Server Settings reports as 6008 MHz. I'm not sure how that clock speed is calculated in the end, but surely 6008 MHz > 1620 MHz. If 1502 MHz were the absolute maximum, why would NVIDIA allow me to go over it, and do so quite frequently when I'm playing a game?

Just for reference, I'm pretty sure this is where Samsai gets his numbers:

I've got a GTX 760 as well, and this screenie shows the relevant section of NVIDIA X Server Settings.
