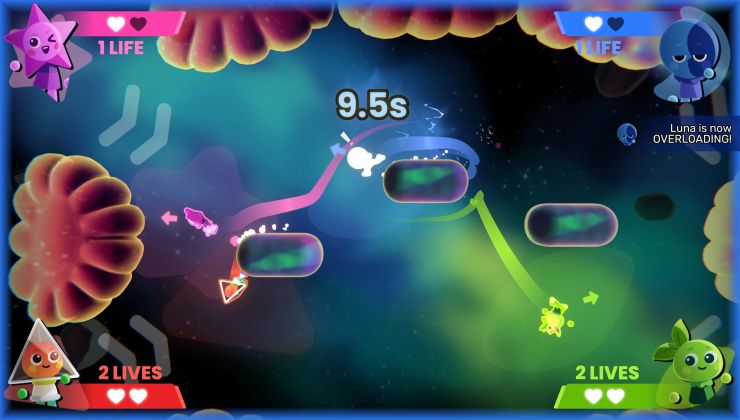

I'm really excited to try Magicka 2, as I thought the first one was quite fun. The developer has been keeping everyone up to date on progress in their Steam forum. Sadly, for AMD GPU users it looks like it won't run as well as on Nvidia hardware.

A developer wrote this on their official Steam forum:

> We've had discussions on how to support non-XInput controllers and we found that just using SDL's input subsystem would probably solve that. Since there are controller emulators already, we consider it a 'would be nice' feature.
>
> I took the liberty of replacing the Nvidia GTX 660 in my Linux machine with our Radeon 270X and ran some tests. On Ubuntu I tested both the open source drivers and fglrx, and both worked fine. I think the open source drivers have slightly better performance. Switching drivers around kinda broke my setup, though, so I installed Debian 8 and did some tests there, and only had issues with decals getting a slight rectangular outline.
>
> Overall, the biggest issue in my tests with AMD cards is that the performance on Linux & MacOSX feels like it's halved compared to a corresponding Nvidia card. I've added graphics settings to control how many decals/trees/foliage the game draws, which helps a bit, but it would've been better if this was not necessary.

It's fantastic to see them actually implement something to help with the issue, though, and I'm sure many AMD GPU users will be pretty happy about that. It's not all doom and gloom, since the developer mentioned it will even work on the open source driver for AMD users, so that's great too. They must be one of very few developers testing their port so thoroughly; it's quite refreshing to see.

I just want to get my hands on it already!

Quoting: alex
> Why? Well because it's impossible. That's why. Just like all this pseudo-programming bullshit

Obviously it is impossible, as proven beyond any doubt by your most enlightening anecdote. If they don't detect every programming mistake or lost optimization opportunity imaginable, surely it is impossible to hack around anything at all. The rest of us are simply talking out of our asses. Glad that's settled then.

At least I have some real examples and not just unconfirmed bullshit. You are just rehashing things you have heard from others, but since you don't understand the topic you just spew out tons of bullshit. You might have some things more or less correct, but the way you explain it just marks you with this gigantic neon lit sign "incompetent".

The mirv example was well documented, and if this is true then yes, that's a good and specific example. But when you explain in terms of "magic" and such, it's just completely obvious you don't know anything about software development.

What "real" examples (you have) are you referring to?

Quoting: alex
> The mirv example was well documented, and if this is true then yes, that's a good and specific example. But when you explain in terms of "magic" and such, it's just completely obvious you don't know anything about software development.

Damn. Busted. I wonder how our software design business lasted for ten years before anyone found out I have no idea what I'm doing. Please don't tell our clients. :'(

Quoting: tuubi
> Quoting: alex
> > The mirv example was well documented, and if this is true then yes, that's a good and specific example. But when you explain in terms of "magic" and such, it's just completely obvious you don't know anything about software development.
>
> Damn. Busted. I wonder how our software design business lasted for ten years before anyone found out I have no idea what I'm doing. Please don't tell our clients. :'(

So you're working for Microsoft? ( ͡° ͜ʖ ͡°)

The restrict pointer example is a real world example of how limited optimization is. We are basically discussing compiler optimization passes now. And this is not something I'm going to do on a random forum filled with ppl who never wrote a single line of OpenGL yet are experts on the Nvidia driver.

If the driver was this magical optimizer that fixes bad behavior automagically, then why are ppl discussing "approaching zero driver overhead" a lot now?

http://gdcvault.com/play/1020791/

See this talk; you will see that in order to fix bad behavior you simply don't use Nvidia, you rewrite the code! (Which ofc would be blatantly clear if you knew anything about the limitations a compiler faces)

Quoting: tuubi
> Quoting: alex
> > The mirv example was well documented, and if this is true then yes, that's a good and specific example. But when you explain in terms of "magic" and such, it's just completely obvious you don't know anything about software development.
>
> Damn. Busted. I wonder how our software design business lasted for ten years before anyone found out I have no idea what I'm doing. Please don't tell our clients. :'(

So you write OpenGL there? No? Right.

Language? Hello kitty script?

But that wasn't the example "you have", was it? And why does it show how limited optimization is? We're talking about anything from simple type conversions to replacing whole shaders. You just provided a for-loop, which doesn't tell us anything.

In general, you're not doing much more than telling us how stupid we all are. Other than insults, you haven't contributed much so far.

Quoting: alex
> See this talk; you will see that in order to fix bad behavior you simply don't use Nvidia, you rewrite the code! (Which ofc would be blatantly clear if you knew anything about the limitations a compiler faces)

How is this different from what I suggested in the first place?

Last edited by alexThunder on 15 October 2015 at 10:28 pm UTC

Whatever man, this whole thing is retarded. Impossible to get through when you don't know shit.

The loop was an extremely simple counterargument showing that, given very simple code, the compiler cannot magically optimize things. You need to manually mark things. This is a counterargument to this whole "Nvidia compiler automagically fixes everything", "you can write shitty code and it gets optimized, boom bang yeah!" thing. Yet back in reality land, the compiler cannot even figure out that the for loop could run much faster if marked restrict.
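The aliasing problem behind the `restrict` argument can be sketched in C99. The loop from the earlier post isn't reproduced in this thread, so the functions below (`store_then_read`, `store_then_read_restrict`, `add_arrays_restrict`) are hypothetical illustrations, not the code being discussed. Without `restrict`, the compiler must assume two pointers may refer to the same object, so it has to reload memory after every store through the other pointer. With `restrict`, the programmer promises they don't overlap, which *permits* (but doesn't force) the compiler to skip that reload or vectorise the loop.

```c
#include <stddef.h>

/* Hypothetical example: without restrict, a and b may point to the same
   int, so after the store through b the compiler must reload *a. */
int store_then_read(int *a, int *b)
{
    *a = 1;
    *b = 2;
    return *a; /* 2 if a == b, otherwise 1 */
}

/* Same function with C99 restrict: the caller promises a and b do not
   alias, so the compiler may fold the return value to 1 without a reload.
   Passing aliasing pointers here is undefined behaviour. */
int store_then_read_restrict(int *restrict a, int *restrict b)
{
    *a = 1;
    *b = 2;
    return *a;
}

/* The same promise applied to a loop: with dst and src marked restrict,
   the compiler may vectorise without proving the arrays don't overlap. */
void add_arrays_restrict(float *restrict dst, const float *restrict src, size_t n)
{
    for (size_t i = 0; i < n; ++i)
        dst[i] += src[i];
}
```

How much this matters in practice depends on the compiler and flags; compilers can sometimes recover part of the benefit on their own by emitting a runtime overlap check in front of a vectorised path.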

And how does this example show that compilers don't do any optimizations elsewhere?

(Yes, it's a trap)

Last edited by alexThunder on 15 October 2015 at 10:42 pm UTC
