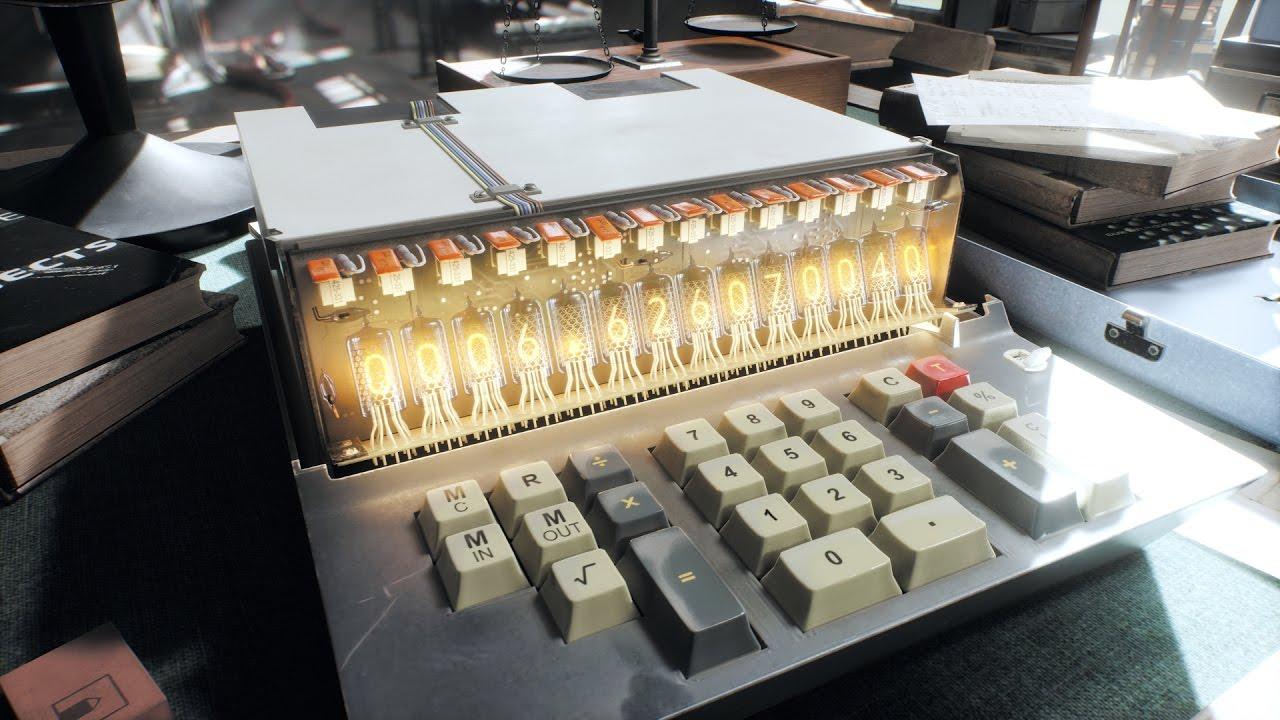

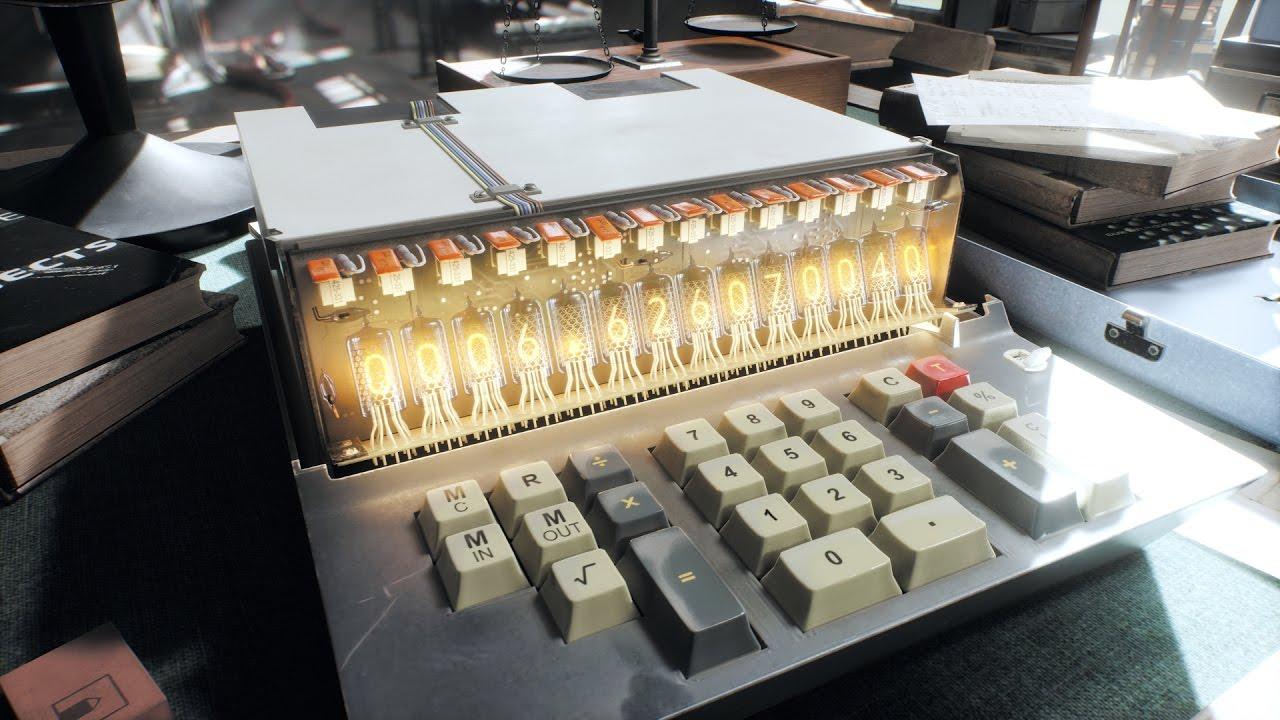

The newest benchmarking test by Unigine Corp shows off their UNIGINE 2 engine and rather impressive visuals. Get ready to push your GPUs hard!

Having run the Superposition benchmark, I can confirm that it’s indeed a very demanding test. You can run the program in either a benchmark mode that automatically runs through a series of tests or engage it in game mode. In game mode you are free to play around with the physics, objects and lighting and get a real feel for what exactly is being rendered. Either way, it all looks pretty darn good. At the end of the benchmark, the program spits out a score which you can use to compare your results with other people's.

Though the Linux version of the benchmark includes a VR-ready test, actual VR support for headsets is currently not implemented. The choice of renderer is limited as well, with OpenGL being the only option available on our platform. Vulkan support would have been nice to have and hopefully it’ll be added in a future update. But given this tweet, it doesn’t seem like a certain thing.

The benchmark itself seems to have good multi-threaded support, with its workload being spread out evenly across all my cores. The only issue I ran into in testing thus far is that it doesn't fully detect all my VRAM, only seeing 3GB out of 8GB on my RX 480. But the test itself had no problem using more than the detected amount when I cranked up the settings. The actual stress test is an advanced feature not covered in the free version so you'll have to buy a license to access it or use some of the other features, such as uploading results to a leaderboard.

You can try Superposition out for yourself by downloading it from here.

I sense that this is one of those benchmarks people will keep coming back to when comparing GPUs. So, are you impressed? What are your results like? Let us know in the comments!

Thanks pete910 for the heads up

"vulkan is not mature enough for production use yet"

Surely it will be if no one uses it... Maybe this attitude is why they can't sell a single engine license.

1 Likes, Who?

"vulkan is not mature enough for production use yet"

Surely it will be if no one uses it... Maybe this attitude is why they can't sell a single engine license.

Well they obviously haven't seen what Feral & Croteam are up to with Vulkan.

5 Likes, Who?

Well they obviously haven't seen what Feral & Croteam are up to with Vulkan.

To be fair, they haven't. Well, nowadays they might have, but that tweet was made half a year ago, back when Croteam was still facing Vulkan driver issues with The Talos Principle and long before the Mad Max update happened.

That said, I'm pretty sure Doom had Vulkan support patched in sometime before the tweet, and that worked out rather well...

0 Likes

I missed how old the tweet was, it's probably too early for me to be messing about on the internet. ;)

1 Likes, Who?

Sadly they do not support Vulkan, would really like to see a good Vulkan Benchmark for Linux.

Just ran it yesterday evening on the 1080p Extreme preset. My machine only got a score of 1100-something, whereas HexDSL's machine (nearly the exact same hardware, same GPU, same driver) got over 1500. The only difference so far is that he uses Antergos and I still use Ubuntu 16.04. So maybe this was another coffin nail for Ubuntu.

0 Likes

See I am helpful :P

It is brutal on GPUs tbh. Also, the OpenGL implementation is nowhere near the perf of DX, from the scores I've seen.

Edit:

@BTRE

I've done a bench thread in the forum here [https://www.gamingonlinux.com/forum/topic/2683](https://www.gamingonlinux.com/forum/topic/2683)

Last edited by pete910 on 12 Apr 2017 at 10:08 am UTC

1 Likes, Who?

It is brutal on GPU's tbh, also the openGL implementation is nowhere near the perf of DX from the scores I've seen.

From what I can find the Win10/DX11 results are very close to the Linux/OpenGL looking at Average FPS:

Windows 10 DirectX (1080ti - Extreme setting - 46 FPS):

http://www.guru3d.com/articles-pages/unigine-superposition-performance-benchmarks,2.html

Linux OpenGL: (1080ti - Extreme setting - 44.5 FPS)

http://www.phoronix.com/scan.php?page=article&item=unigine-super-benchmark&num=3

0 Likes

"The only issue I ran into in testing thus far is that it doesn't fully detect all my VRAM, only seeing 3GB out of 8GB on my RX 480."

I am glad I was not the only one with this issue. To be honest, I had a small panic that I had got the 4GB RX 480 instead. I ran Doom again and it reported 8GB, which made me feel a little better. I upgraded to 4.11 RC6 and got a score of 1472. It would have been nice to see a Vulkan API option there, since I think it would have done better.

With Doom I found out that OpenGL is a big burden on your CPU, while Vulkan is much less of one. I really do hope that Unigine adds a Vulkan API down the road; that would be nice.

0 Likes

Sadly they do not support Vulkan, would really like to see a good Vulkan Benchmark for Linux.

Just ran it yesterday evening on 1080p extreme preset. My machine got only 1100-somthing score, whereas HexDSLs machine (nearly exact same hardware, same gpu, same driver) got over 1500. Only difference so far was, he uses Antergos and I still use Ubuntu 16.04. So maybe this was another coffin nail for Ubuntu.

Well you did have a different CPU, not sure if the Intel Xeon E3-1231 is comparable in speed with the Intel Core-i7-3770 at the same clock.

There is not much detailed information about the hardware: the motherboard, RAM speed, graphics card RAM speed, PCIe speed etc. could be different, as could many other things that have an impact.

It sure would be interesting to see different distributions compared on the exact same hardware, and similar software that could impact the speed, like the kernel, graphics driver, compositor etc.

I do use Ubuntu 16.10. I might install some other distributions on free partitions, especially if someone else shows a big difference that cannot be fixed without changing distribution :-)

0 Likes

It is brutal on GPU's tbh, also the openGL implementation is nowhere near the perf of DX from the scores I've seen.

From what I can find the Win10/DX11 results are very close to the Linux/OpenGL looking at Average FPS:

Windows 10 DirectX (1080ti - Extreme setting - 46 FPS):

http://www.guru3d.com/articles-pages/unigine-superposition-performance-benchmarks,2.html

Linux OpenGL: (1080ti - Extreme setting - 44.5 FPS)

http://www.phoronix.com/scan.php?page=article&item=unigine-super-benchmark&num=3

Take his benches with a bucket of salt. Why on earth he can't just use the actual score that the bench produces is beyond me.

1080s are averaging 4500 in Windows. Mine is 3225, so like I said, not even close.

We need to see the score to determine how Phoronix's and everybody else's results stack up. The average FPS is only part of the benchmark's final score.

0 Likes

Sadly they do not support Vulkan, would really like to see a good Vulkan Benchmark for Linux.

Just ran it yesterday evening on 1080p extreme preset. My machine got only 1100-somthing score, whereas HexDSLs machine (nearly exact same hardware, same gpu, same driver) got over 1500. Only difference so far was, he uses Antergos and I still use Ubuntu 16.04. So maybe this was another coffin nail for Ubuntu.

I doubt it has anything to do with your choice of distro. Every benchmark I was able to find shows that Ubuntu and Antergos have essentially identical performance. No doubt your issue lies elsewhere.

0 Likes

I know this is old but I started to play around with this benchmark today.

Ran some tests today. Looks nice. My benchmark results are near the bottom of the leaderboard though. I can hardly find tests with my GPU type and OpenGL (and especially not with my CPU). Sidenote: it makes zero difference whether I overclock my CPU hard for this test or not. Guess my bottleneck is PCIe 2.0.. well anyway..

Unsatisfied with the provided filters on the leaderboard, I fired up my developer console and watched the traffic. Oh.. this is a React app with JSON communication. Interesting. Looking closer at the JSON data I find undisplayed information like

* user.avatarUrlx4

* vulkanVersion

* sliMode

* crossfireMode

* otherGPUs

* graphicsApi

The query itself looks ugly af since it's basically GET parameters with values that are JSON, but urlencoded from a string with many spaces, resulting in plenty of pointless garbage (like %20 for the space character). Why someone would do this is beyond me. Stripping the useless %20 sequences and then urldecoding the whole thing gives a much clearer picture.

Example of an original urldecoded and cleaned request:

https://api-benchmark.unigine.com/v1/benchmark/results?select={"result":["id","user","cpus","activeGpus","activeGpusCount","score","motherboard","os","graphicsApi","memoryTotalAmount","fpsMin","fpsMax","fpsAvg","leaderboardPosition","added","preset"],"cpus":["vendor","name","baseClock","maxClock","physicalCoresCount","logicalCoresCount"],"activeGpus":["chipVendor","chipName","chipCodename","deviceVendor","deviceName","driver","driverVersion","baseGpuClock","baseMemoryClock","vram","temperatureMin","temperatureMax","gpuClockMin","gpuClockMax","memoryClockMin","memoryClockMax","utilizationMin","utilizationMax"]}&where={"result":[["isGpusCountBestScore","eq",true],["preset","eq",2],["activeGpusCount","eq",1]]}&sort={"result":{"fpsAvg":-1}}&limit=50&skip=0

Hm.. looks like the server-side filter works with some sort of typical REST pattern. I feel reminded of SequelizeJS and Loopback. Let's inject into the results the 'chipVendorId' that the on-page search filter suggests when I type "NVIDIA". For this I converted the where parameter to JSON (for comfort), added my string chipVendorId to its array and urlencoded the whole thing again. Bingo. The API returns my request _including_ the otherwise omitted chipVendorId, so I guess we're free to manipulate the query as we please.
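For anyone who would rather not do the decode, edit and re-encode round trip by hand, here is a minimal Python sketch of it. The query string is a shortened stand-in for the captured one, and `decode_query` and `encode_query` are helper names made up for this illustration. It adds chipVendorId to the activeGpus list of select, which is where the decoded request further down shows it ending up.

```python
import json
from urllib.parse import parse_qs, urlencode

# Shortened stand-in for the captured query string (most fields left out).
# Note the padded %20 after some commas, as in the original traffic.
raw_query = (
    "select=%7B%22result%22%3A%5B%22id%22%2C%20%22score%22%5D%2C%20"
    "%22activeGpus%22%3A%5B%22chipName%22%5D%7D"
    "&where=%7B%22result%22%3A%5B%5B%22preset%22%2C%22eq%22%2C2%5D%5D%7D"
    "&limit=50&skip=0"
)


def decode_query(query):
    """URL-decode a query string and parse every JSON-valued parameter."""
    params = {}
    for key, values in parse_qs(query).items():
        value = values[0]
        try:
            params[key] = json.loads(value)
        except json.JSONDecodeError:
            params[key] = value  # not JSON, keep the plain string
    return params


def encode_query(params):
    """Serialise values as compact JSON (no padding spaces) and URL-encode them."""
    compact = {
        key: value if isinstance(value, str)
        else json.dumps(value, separators=(",", ":"))
        for key, value in params.items()
    }
    return urlencode(compact)


params = decode_query(raw_query)
# Ask for the otherwise omitted field as well.
params["select"]["activeGpus"].insert(0, "chipVendorId")
new_query = encode_query(params)
print(new_query)
```

Because the JSON is serialised without spaces before encoding, the rebuilt query carries none of the %20 padding complained about above.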

Let's see what the id for OpenGL is. There are some entries for Linux Mint and Ubuntu. Wild guess at this point: graphicsApi is either 1 (DirectX) or 2 (OpenGL). Mayhap Vulkan will be 3 (atm it gives an internal server error).

Onwards to the search query. Its URL parameter is where and the important object is result. First I tried appending graphicsApi, but the server doesn't seem to like more than _3_ options for result. Since graphicsApi is a child of result, judging by the examined reply JSONs, I need it exactly here, so I sacrificed the activeGpusCount option and replaced it with graphicsApi. The object for where now looks like this:

"result":[["isGpusCountBestScore","eq",true],["preset","eq",2],["graphicsApi","eq",2]]

"preset" is btw just "PreSet", one of the options in the performance type dropdown. It looks like this:

<select class="filter-preset" name="preset">

<option value="1">720p Low</option>

<option value="2">1080p Medium</option>

<option value="3">1080p High</option>

<option value="4">1080p Extreme</option>

<option value="5">4K Optimized</option>

<option value="6">8K Optimized</option>

</select>

More fun: when "preset" is removed completely from the query I get _all_ results (with the default limit of 50; please be nice and request in batches of said 50 using the skip and limit parameters or the server may outright ban your IP *cough*).
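The batching advice can be sketched in Python as below. This only builds the URLs and does not contact the server. The function names, the trimmed-down select list and the assumption that the API accepts a select with only a result entry are mine; the graphicsApi value of 2 for OpenGL is the guess made earlier in this post.

```python
import json
from urllib.parse import urlencode

BASE_URL = "https://api-benchmark.unigine.com/v1/benchmark/results"
GRAPHICS_API_OPENGL = 2  # guess from above: 1 = DirectX, 2 = OpenGL


def to_json(value):
    """Compact JSON, so no padding spaces end up in the URL."""
    return json.dumps(value, separators=(",", ":"))


def build_url(skip, limit=50, preset=None, graphics_api=GRAPHICS_API_OPENGL):
    """Build one results URL; at most three where-conditions are used."""
    conditions = [["isGpusCountBestScore", "eq", True]]
    if preset is not None:  # leave the preset out to get all presets
        conditions.append(["preset", "eq", preset])
    conditions.append(["graphicsApi", "eq", graphics_api])
    params = {
        # Trimmed select list for readability (an assumption, not the full one).
        "select": to_json({"result": ["id", "score", "os", "graphicsApi", "fpsAvg"]}),
        "where": to_json({"result": conditions}),
        "sort": to_json({"result": {"fpsAvg": -1}}),
        "limit": limit,
        "skip": skip,
    }
    return BASE_URL + "?" + urlencode(params)


def batch_urls(total, limit=50, **filters):
    """One URL per batch of `limit` results until `total` results are covered."""
    return [build_url(skip, limit, **filters) for skip in range(0, total, limit)]


urls = batch_urls(120)
for url in urls:
    print(url)
```

Fetching those URLs one at a time, with a pause in between, keeps each request at the default batch size of 50.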

So forging my query gave me a total of 42 results with OpenGL. For those who care: the reply should be JSON, so simply run the following curl request (fiddle with the parameters at will) and view the resulting opengl-users.json in some JSON parser/viewer (there are some online) to get a better overview:

curl 'https://api-benchmark.unigine.com/v1/benchmark/results?select=%0A%7B%0A%22result%22%3A%5B%22id%22%2C%22user%22%2C%22cpus%22%2C%22activeGpus%22%2C%22activeGpusCount%22%2C%22score%22%2C%22motherboard%22%2C%22os%22%2C%22graphicsApi%22%2C%22memoryTotalAmount%22%2C%22fpsMin%22%2C%22fpsMax%22%2C%22fpsAvg%22%2C%22leaderboardPosition%22%2C%22added%22%2C%22preset%22%5D%2C%22cpus%22%3A%5B%22vendor%22%2C%22name%22%2C%22baseClock%22%2C%22maxClock%22%2C%22physicalCoresCount%22%2C%22logicalCoresCount%22%5D%2C%22activeGpus%22%3A%5B%22chipVendorId%22%2C+%22chipVendor%22%2C%22chipName%22%2C%22chipCodename%22%2C%22deviceVendor%22%2C%22deviceName%22%2C%22driver%22%2C%22driverVersion%22%2C%22baseGpuClock%22%2C%22baseMemoryClock%22%2C%22vram%22%2C%22temperatureMin%22%2C%22temperatureMax%22%2C%22gpuClockMin%22%2C%22gpuClockMax%22%2C%22memoryClockMin%22%2C%22memoryClockMax%22%2C%22utilizationMin%22%2C%22utilizationMax%22%5D%0D%0A%7D&where=%7B%0A%22result%22%3A%5B%5B%22isGpusCountBestScore%22%2C%22eq%22%2Ctrue%5D%2C%5B%22graphicsApi%22%2C%22eq%22%2C2%5D%5D%7D&sort=%7B%0A%22result%22%3A%7B%22fpsAvg%22%3A-1%7D%0A%7D&limit=50,&skip=0' -H 'Pragma: no-cache' -H 'Origin: https://benchmark.unigine.com' -H 'Accept-Encoding: gzip, deflate, sdch, br' -H 'Accept-Language: de-DE,de;q=0.8,en-US;q=0.6,en;q=0.4' -H 'User-Agent: Mozilla/5.0 (X11; Fedora; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36' -H 'Accept: application/json, text/plain, */*' -H 'Cache-Control: no-cache' -H 'Connection: keep-alive' -H 'DNT: 1' --compressed > opengl-users.json

The parameters look decoded like this (and have to be urlencoded again before requesting them as application/json!):

?select={"result":["id","user","cpus","activeGpus","activeGpusCount","score","motherboard","os","graphicsApi","memoryTotalAmount","fpsMin","fpsMax","fpsAvg","leaderboardPosition","added","preset"],"cpus":["vendor","name","baseClock","maxClock","physicalCoresCount","logicalCoresCount"],"activeGpus":["chipVendorId", "chipVendor","chipName","chipCodename","deviceVendor","deviceName","driver","driverVersion","baseGpuClock","baseMemoryClock","vram","temperatureMin","temperatureMax","gpuClockMin","gpuClockMax","memoryClockMin","memoryClockMax","utilizationMin","utilizationMax"]}&where={"result":[["isGpusCountBestScore","eq",true],["graphicsApi","eq",2]]}&sort={"result":{"fpsAvg":-1}}&limit=50,&skip=0

The interesting thing: not all of these are Linux. In fact, some report Windows. Mayhap some Wine users? Some quick and dirty stats using sort and uniq follow. Yes, there are more GPUs than users: I removed the GPU count filter above and some have SLI or whatever:

- 26 "distributionName": "Unknown distribution or Windows"

- 1 "distributionName": "Debian GNU/Linux"

- 2 "distributionName": "elementary OS"

- 1 "distributionName": "Gentoo"

- 10 "distributionName": "Linux Mint"

- 2 "distributionName": "Ubuntu"

- 1 "name": "Core i3-3220"

- 4 "name": "Core i5-3570"

- 1 "name": "Core i5-4440S"

- 1 "name": "Core i5-4670"

- 1 "name": "Core i5-4690"

- 1 "name": "Core i5-6600"

- 2 "name": "Core i5-7500"

- 1 "name": "Core i7-3770K"

- 2 "name": "Core i7-4770"

- 1 "name": "Core i7-4770K"

- 1 "name": "Core i7-5820K"

- 1 "name": "Core i7-5960X"

- 2 "name": "Core i7-6700K"

- 3 "name": "Core i7-6800K"

- 1 "name": "Core i7-7700"

- 4 "name": "Core i7-7700K"

- 4 "name": "Core i7 X 980"

- 1 "name": "Genuine 0000"

- 1 "name": "FX-8350 Eight-Core Processor"

- 1 "name": "Phenom II X6 1090T Processor"

- 6 "name": "Ryzen 5 1600X Six-Core Processor"

- 1 "name": "Ryzen 7 1700X Eight-Core Processor"

- 1 "name": "Ryzen 7 1800X Eight-Core Processor"

- 8 "chipName": "GeForce GTX 1060 6GB"

- 8 "chipName": "GeForce GTX 1070"

- 6 "chipName": "GeForce GTX 1080"

- 9 "chipName": "GeForce GTX 1080 Ti"

- 1 "chipName": "GeForce GTX 580"

- 1 "chipName": "GeForce GTX 660"

- 2 "chipName": "GeForce GTX 980"

- 5 "chipName": "GeForce GTX 980 Ti"

- 4 "chipName": "GeForce GTX TITAN"

- 1 "chipName": "Radeon R9 Fury Series"

- 1 "driverVersion": "16.50.2011-161223"

- 1 "driverVersion": "373.06"

- 1 "driverVersion": "375.20"

- 1 "driverVersion": "375.39"

- 1 "driverVersion": "375.66"

- 4 "driverVersion": "378.09"

- 2 "driverVersion": "378.13"

- 1 "driverVersion": "381.22"

- 2 "driverVersion": "381.65"

- 2 "driverVersion": "382.05"

- 2 "driverVersion": "382.13"

- 2 "driverVersion": "382.33"

- 3 "driverVersion": "382.53"

- 4 "driverVersion": "384.59"

- 1 "driverVersion": "384.69"

- 1 "driverVersion": "384.76"

- 3 "driverVersion": "385.28"

- 11 "driverVersion": "385.41"

- 1 "driverVersion": "388"

- 1 "driverVersion": "388.13"
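The same kind of tally can be done without sort and uniq. Below is a rough Python sketch that walks any nested JSON and counts the values found under the chosen keys, so it makes no assumption about the exact layout of the reply. The `sample` records are invented for illustration only, and `count_values` is a made-up helper name.

```python
from collections import Counter


def count_values(node, keys, counts=None):
    """Walk nested dicts/lists and tally scalar values stored under `keys`."""
    if counts is None:
        counts = {key: Counter() for key in keys}
    if isinstance(node, dict):
        for key, value in node.items():
            if key in counts and isinstance(value, (str, int, float)):
                counts[key][value] += 1
            else:
                count_values(value, keys, counts)
    elif isinstance(node, list):
        for item in node:
            count_values(item, keys, counts)
    return counts


# Invented sample records; the real reply layout may differ.
sample = [
    {
        "os": {"distributionName": "Linux Mint"},
        "activeGpus": [{"chipName": "GeForce GTX 1070", "driverVersion": "385.41"}],
    },
    {
        "os": {"distributionName": "Ubuntu"},
        "activeGpus": [
            {"chipName": "GeForce GTX 1070", "driverVersion": "385.41"},
            {"chipName": "GeForce GTX 1070", "driverVersion": "385.41"},
        ],
    },
]

counts = count_values(sample, ["distributionName", "chipName", "driverVersion"])
for key, counter in counts.items():
    for value, number in sorted(counter.items()):
        print(f"- {number} {key}: {value}")
```

To run it on the downloaded file, load opengl-users.json with `json.load` and pass the result in place of `sample`. Be careful with a generic key such as "name": it is counted wherever it appears in the reply, not only under cpus.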

This is seriously lacking some AMD CPUs! Anyway. I got some data to compare and I'm happy now :) Feel free to play with this, too. For the lazy.. https://pastebin.com/1zHSJKCR

Disclaimer: This is not hacking. It's a public, keyless (but undocumented) API. (I didn't even try the other methods it announces, which are probably nastier: Access-Control-Methods: POST, DELETE, PATCH.)

0 Likes
