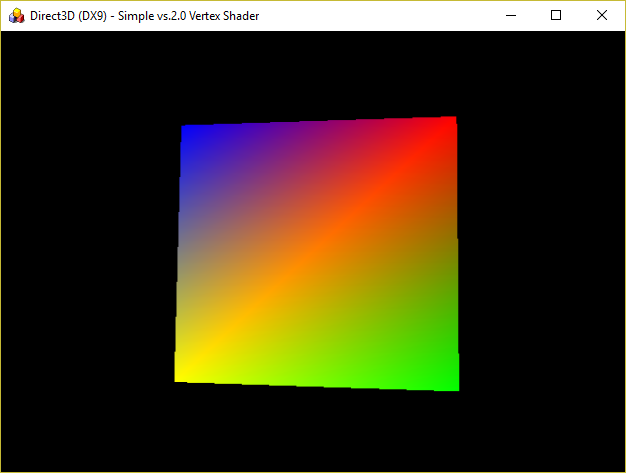

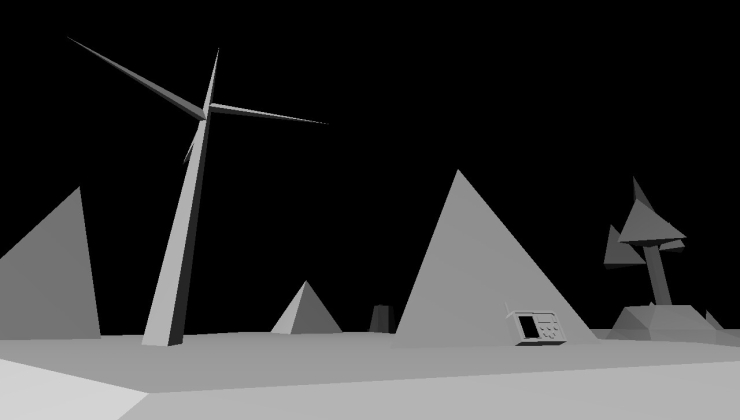

The developer of VK9, another rather interesting compatibility layer, has advanced further with the announcement of another completed milestone.

Much like DXVK, it aims to push Direct3D over to Vulkan. While DXVK focuses on D3D11 and D3D10, the VK9 project is fixed on D3D9.

Writing about hitting the 29th milestone, the developer said this on their blog:

VK9 has reached it's 29th milestone. Reaching this milestone required further shader enhancements including support for applications which set a vertex shader but no pixel shader. In addition to this there are a number of fixes including proper NOOVERWRITE support which fixed the graphical corruption in UT99. This release also no longer depends on the push descriptor extension so VK9 should now be compatible with the closed source AMD driver. New 32bit and 64bit builds are available on Github.

It's always fun to watch these projects progress. Give it another year and it will be exciting to see what we can do with it. They have quite a few milestones left to achieve, which you can find on the Roadmap.

Find it on GitHub.

I wish Valve would support this...

This project can give Proton the proper retro compatibility/performance for heavy weight DX9 games.

Are there any heavy weight DX9 games?

I believe a lot of Blizzard games are DX9, StarCraft 2 for instance.

There are a ton of them.

It was the de facto API before DX11, but the biggest one that comes to mind is the original Skyrim.

As already mentioned, loads of Triple AiAiAi (hat tip to Jim Sterling) games run on dx9, even those that came out after dx10 became available.

Assassin's Creed 1 has a dx10 mode and a dx9 fallback; but the next 3 games in the series are all dx9. GTA IV + its expansion packs are also dx9.

Yeah, the current DX9 implementation in Wine / Proton is very heavy, especially with various Unreal Engine 3 games, Need for Speed: Hot Pursuit (2010), vanilla Skyrim and many others.

Normally these games don't use more than 2 cores (in some rare cases they use more, but those are few compared with regular DX9 titles).

For that reason Ryzen doesn't work well with this type of game, which is why I have a Core i3 8350K at 5.0GHz (215 single thread in Cinebench R15).

Also, I pay more attention to older titles than newer ones, because older titles lack tests compared to newer games.

Another thing: a CPU with high single-thread performance gives better minimum FPS in these games.

^_^

Last edited by mrdeathjr on 17 Dec 2018 at 8:59 pm UTC

And I suppose old games like Still Life can finally work...

And Crysis. Don't forget Crysis... Try to play that game via Proton with modest hardware!

It's highly subjective. In general, humans perceive anything over 25/30 FPS as "continuous" and anything over 60 FPS as "smooth" but most can distinguish between 30 and 60 FPS and quite a few can recognise changes between 60 and 120 FPS. Above that, things get extremely subjective and most people can't see any difference.

I can tell the difference on my desktop between 144 and 120Hz. It shouldn't be that different but it's night and day to me. So when people claim to be able to notice changes between even higher refresh rates, I'm not so doubtful...

This is why I'm excited about Freesync finally landing. I've never tried it as I'm Linux only and this might be the one thing that could fool my perception of frame rate.

If it's really "night and day" then it might be that your display simply behaves very differently on those two frequencies or that you really have not performed a blind comparison.

Possibly.

It was an unforced blind test of sorts. During a kernel update I lost the 144Hz mode and my monitor automatically dropped to 120Hz. At the time I noticed the desktop didn't feel as fluid; after rolling back to an older kernel, I was once again surprised at the difference.

I'm using a 1440p 27” monitor and those extra 24Hz make the cursor so much smoother that it's very evident.

I have often seen Linux ports or games running under Wine reduce performance by double digit FPS and/or % and people hailing that as acceptable since performance is still good and they may have an otherwise great point.

But if running a game under Wine reduces the FPS say from 150 to 100 or from 200 to 150 the general public will tend to perceive that as an utter failure and totally unacceptable. It will dissuade them from switching and the Linux marketshare will stay low.

Perception is everything. So it is crucial to get Linux performance as near to Windows performance as possible; if it can be faster, even better.

Edit:

If I remember the numbers correctly, The Witcher 2 port's performance was bad enough that it was a way, way bigger performance loss than what the general public would accept.

Also, another attitude I have sometimes seen is "Oh, it is fine that game is not DX11 exclusive, its DX9 mode works fine under Wine". Neither the general public nor hardcore gamers share that attitude. They think: "Why should I switch to Linux if that means giving up eye candy or features?".

Which is why projects such as DXVK are so important.

Afaik, the human eye cannot perceive any change above 30 fps.

It's highly subjective. In general, humans perceive anything over 25/30 FPS as "continuous" and anything over 60 FPS as "smooth" but most can distinguish between 30 and 60 FPS and quite a few can recognise changes between 60 and 120 FPS. Above that, things get extremely subjective and most people can't see any difference.

Dude no, that's absolutely not true. If you're talking about watching movies you might be right, but the extra responsiveness and smoothness you get from higher frame rates when gaming is *extremely* noticeable. The difference between 60hz and 120hz when gaming is MASSIVE. I can say that from personal experience and the testimony of everyone I know who has tried a 120hz monitor. If you disagree, just try playing Counter Strike on a PC with a mouse and moving your crosshair back and forth quickly. If you honestly can't tell the difference at that point then you must have some kind of medical condition, or just terrible eyesight. I would consult a doctor (or optician, respectively.)

I found the jump from 120hz to 165hz very noticeable as well, although less so than 60 to 120. In my uneducated opinion, the difference in smoothness in some situations (like quickly turning 180 degrees in a first person shooter) will probably be somewhat noticeable up to around 240hz, maybe even further. I'd have to try it myself to be sure.

(Also if your entire comment was about *seeing* a difference, not *feeling* a difference while gaming, then I apologize in advance. A high frame rate is much less important when you're just watching the screen and not interacting in any way.)
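For a rough sense of why the jump from 60 to 120 feels bigger than the jump from 120 to 165, it can help to compare frame times rather than refresh rates. The sketch below is only illustrative arithmetic (the function names are made up for this example) and says nothing about what any given person can actually perceive.

```python
def frame_time_ms(hz: float) -> float:
    """Time between two refreshes, in milliseconds, at a given rate."""
    return 1000.0 / hz


def frame_time_saved_ms(low_hz: float, high_hz: float) -> float:
    """How many milliseconds per frame are saved going from low_hz to high_hz."""
    return frame_time_ms(low_hz) - frame_time_ms(high_hz)


if __name__ == "__main__":
    # Refresh-rate steps mentioned in the thread.
    for low, high in [(60, 120), (120, 144), (120, 165), (144, 240)]:
        saved = frame_time_saved_ms(low, high)
        print(f"{low} -> {high} Hz: {saved:.2f} ms less per frame")
```

Each step up saves fewer milliseconds per frame than the previous one, which would fit the impression of diminishing returns at higher refresh rates.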

I'm not speaking for myself. As I said in the first sentence, it's highly subjective; I can absolutely both see and feel the difference between 60 and 120Hz/FPS. If you look around at blind tests and consumer reports on monitors, however, you'll see that a surprising number of people don't notice any difference between a 60Hz and a 120Hz monitor, even in gaming tests.

Again "quite a few can recognise changes between 60 and 120 FPS", you and I included, but it's not everyone who can.

P.S. A fun anecdotal video is one from Linus Tech Tips ([4K Gaming is Dumb](https://www.youtube.com/watch?v=ehvz3iN8pp4)) where even some of their people couldn't spot any difference between 60/144/240Hz whilst playing Doom 2016.

Totally agree. Refresh rate > resolution. And optimum today is indeed 2560 x 1440 / 144 Hz (with adaptive sync and LFC). Hopefully Linux will support that soon.

Last edited by Shmerl on 14 Jan 2019 at 4:04 am UTC

I'm running 100Hz with G-Sync enabled (on 3440x1440) and it's working fine for the most part; a few games need a helping hand, but it's mostly smooth sailing. Are you referring more to an available open / AMD solution or is 100Hz really the limit?

GPUs simply aren't powerful enough to run games at something like 144 Hz at 4K. The video above makes this point quite well. 2560 x 1440 / 144 Hz matches current generation hardware, at least if we are talking about a single GPU solution.

Absolutely true for 4K, but 2.5K (or whatever one chooses to call 3440x1440) at 100Hz is manageable for many games (though 144Hz would be pushing it), as long as they're not too graphically/computationally expensive; luckily, the only one that's far from the mark in my library is "Total War: Warhammer", which doesn't absolutely require 100Hz anyway.

though 144Hz would be pushing it

That's where adaptive sync should be helpful. Let's say you have a monitor with a sync range of 40 - 144 Hz. Then anything in the range of 40 - 144 fps should be running smoothly.

And then there is also LFC (low framerate compensation). You should try getting a monitor with that. It basically means that the monitor runs at double the framerate to prevent tearing. I.e. let's say your game produces 30 fps, which is below the adaptive sync range. The monitor will run at 60 Hz (which is already in range). So that covers framerates between 20 and 40 fps. Anything below 20 fps is unplayable anyway, so not an issue.
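To make the arithmetic concrete, here is a minimal sketch of the idea. It is a simplified model and not how any real driver or monitor implements it: the function name is hypothetical, and it assumes the display repeats each frame the smallest whole number of times needed to land inside the sync range.

```python
from typing import Optional


def effective_refresh_hz(fps: float, sync_min: float, sync_max: float) -> Optional[float]:
    """Refresh rate a display would use for a given frame rate in this toy model.

    Returns None when no whole-number multiple of fps fits in the sync range.
    """
    if fps <= 0:
        return None
    if fps >= sync_max:
        # Assumption: the panel simply cannot refresh faster than its maximum.
        return sync_max
    if fps >= sync_min:
        # Inside the adaptive sync range: refresh rate follows the frame rate.
        return fps
    # Below the range: repeat each frame (x2, x3, ...) until we are in range.
    multiplier = 2
    while fps * multiplier < sync_min:
        multiplier += 1
    hz = fps * multiplier
    return hz if hz <= sync_max else None


if __name__ == "__main__":
    # Example sync range from the comment above: 40 - 144 Hz.
    for fps in (100, 30, 20):
        print(fps, "fps ->", effective_refresh_hz(fps, 40, 144), "Hz")
```

With the 40 - 144 Hz range, 30 fps maps to 60 Hz and 20 fps maps to 40 Hz in this model, matching the doubling described above.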

Here is a good table I've found recently: https://www.amd.com/en/products/freesync-monitors

Filter by resolution, sync range and LFC.

Hopefully all this will be supported on Linux this year.

Last edited by Shmerl on 14 Jan 2019 at 4:50 pm UTC

G-Sync already seems to do this quite well for me; I was actually referring to the FPS, not the Hz - sorry for the mess-up :)

I'm sure you can get people to say they don't notice a difference in *certain circumstances*. A picture of a gray rock will look very similar whether or not the image has color. If you set up blind tests using a picture of a gray rock, I'm sure you'd get numbers saying lots of people don't notice the difference between grayscale and color images. Playing certain games (like a slow paced strategy game or playing with a controller) will feel very similar on a 60hz and a 120hz monitor.

But put somebody in front of a sufficiently strong PC with a mouse and a first person shooter and the difference between 60hz and 120hz will be as stark as the difference between a grayscale and color image of a carnival.
