Valve developer Pierre-Loup Griffais announced on Twitter a new Mesa shader compiler for AMD graphics named "ACO", and they're calling for testers.

The longer post on Steam goes over a brief history of Valve sponsoring work done by open-source graphics driver engineers, which they describe as "very successful". The team has grown and they decided to go in a different direction with their work.

To paraphrase and keep it short and to the point, currently the OpenGL and Vulkan AMD drivers use a shader compiler that's part of the LLVM project. It's a huge project, it's not focused on gaming and it can cause issues. So, they started working on "ACO" with a focus on good results for shader generation in games and compile speed.

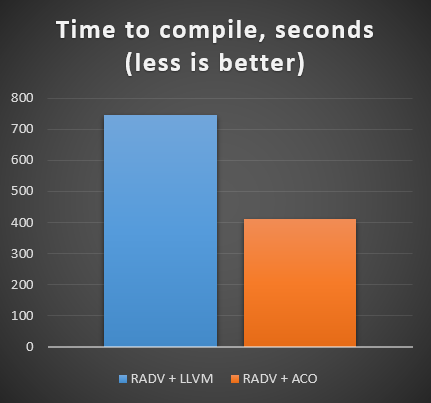

It's not yet finished, but the results are impressive as shown:

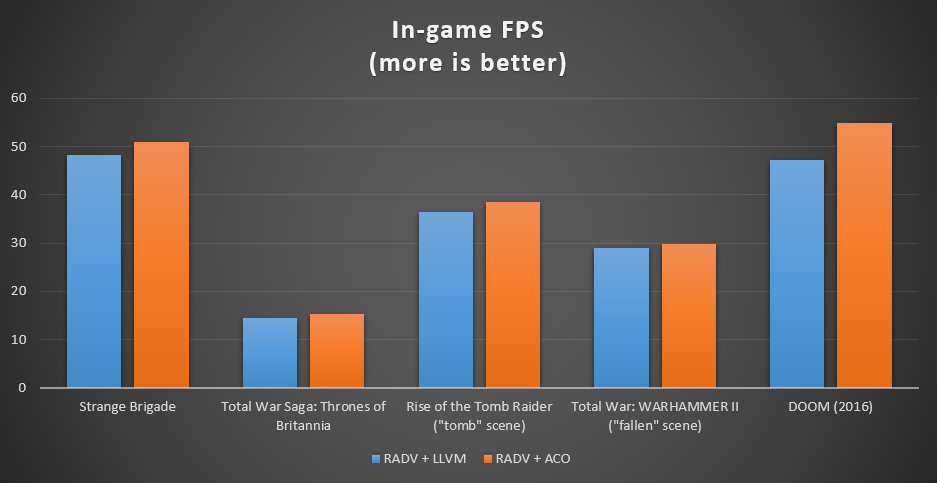

That is quite an impressive decrease in compile time! They expect to be able to improve that further eventually too, as it's currently only handling "pixel and compute shader stages". Valve also included some gaming results. Not quite as impressive when compared to the above perhaps, but every single bit of performance they can squeeze in is great.

More detailed performance testing info is available here. Now that it's looking pretty good, being stable in many games and showing a reduction in stuttering, they're looking for wider testing and feedback. Packages for Arch Linux should be ready later today, with Valve looking into a PPA for Ubuntu too. Interested in testing? See this forum post on Steam.

You can see the full post about it on Steam and more details on the Mesa-dev mailing list entry here. The code can also be viewed on GitHub.

This comes shortly after Valve released a statement about remaining "committed to supporting Linux as a gaming platform" as well as funding work on KWin. Really great to see all this!

I'm loving their commitment more than anything. Most companies would have given up by now.

It probably helps that Valve is a private company, so they can work on long-term investments. Public ones are pressured by external investors to get something onto the market quickly, and not to care about long-term progress.

Last edited by Shmerl on 4 Jul 2019 at 1:05 am UTC

Next build (around Christmas), if AMD has something to offer comparable to the GTX 1660ti (performance, price and TDP), I might give AMD another chance with another brand (Gigabyte or Sapphire).

Don't pass off the ASUS cards. Mine were always good.

This is fantastic! This is really just cutting straight to the heart of gaming issues on Linux, tackling the real hard problems like improving minimal frame rates and eliminating stutter. This will have such a positive impact on gaming performance under Linux. Of course, it's just for AMD GPUs. NVIDIA, getting the hint yet? Open source your drivers if you want some of the open source love!

I can agree that it's good to involve the community and prefer things to be open source, but in this particular instance would Nvidia really benefit from implementing the idea behind this Mesa shader branch? Valve got these good results because they had been using LLVM as their compiler and, like their post says, since it's a general purpose compiler and overall affected by commits from people with other use cases, it didn't make sense to keep using it. But Nvidia's proprietary driver very likely doesn't incorporate LLVM and has probably had a gaming specialized shader compiler from the start.

I get the feeling this is an instance of Valve closing a gap between the open source AMD drivers and Nvidia's proprietary drivers that has existed from the start, and not them using some novel method that surpasses Nvidia's implementation.

But Nvidia's proprietary driver very likely doesn't incorporate LLVM and has probably had a gaming specialized shader compiler from the start.

Nvidia's compiler is using llvm if I remember correctly. At least in some cases.

Their llvm backend: https://github.com/llvm-mirror/llvm/tree/master/lib/Target/NVPTX

Not sure if their new blob Vulkan compiler is using it though.

UPDATE:

Related: https://www.phoronix.com/scan.php?page=article&item=nvidia-396-nvvm

See also: https://docs.nvidia.com/cuda/nvvm-ir-spec/index.html

NVVM IR is a compiler IR (internal representation) based on the LLVM IR. The NVVM IR is designed to represent GPU compute kernels (for example, CUDA kernels). High-level language front-ends, like the CUDA C compiler front-end, can generate NVVM IR. The NVVM compiler (which is based on LLVM) generates PTX code from NVVM IR.

So, likely they do use llvm to translate PTX into machine code.

Last edited by Shmerl on 4 Jul 2019 at 4:52 am UTC

You're probably correct on all those points. But gradyvuckovic's remark was more about Nvidia realizing that a lot of good things can come out of an open-source ecosystem.

Nvidia doesn't care. They are like Oracle in this sense. They deal with open source very reluctantly, because they totally don't get the point of it.

Last edited by Shmerl on 4 Jul 2019 at 4:54 am UTC

I think what Oracle gets is that, whatever the point of open source is, it probably isn't getting Larry Ellison an even longer yacht, so they are deeply suspicious.

Heh, it reminds me of this video: [https://www.youtube.com/watch?v=-zRN7XLCRhc&t=33m01s](https://www.youtube.com/watch?v=-zRN7XLCRhc&t=33m01s) (starts at 33:01).

Last edited by Shmerl on 4 Jul 2019 at 8:21 am UTC

But Nvidia's proprietary driver very likely doesn't incorporate LLVM and has probably had a gaming specialized shader compiler from the start.

It actually does, but for a different purpose. AFAIK they use LLVM for the same purpose that Mesa has NIR for, while the backend that targets their various GPU ISAs lives entirely inside the driver (so it would be SPIR-V -> LLVM -> (some IR) -> binary). AMD has a GCN backend living in LLVM, so for them it's SPIR-V -> NIR -> LLVM -> binary.

Well, I bought an MSI Armor RX580 8G a few months back, and I actually avoid playing anything too GPU-heavy because of the hellish noise it makes, so I wouldn't bet on it. There's also an unhealthy, intermittent rattle coming from the bearings already. This is probably the first and the last MSI product I'll ever buy.

Thing is, when you buy a GPU, you don't buy it thinking it will break in the next 6 months. This one had really cheap fans that had bearing noise problems. None of my GTX650 (ASUS), GTX750 ti (Gigabyte) and GTX960 (Gigabyte) had these problems and I never paid for premium models. There's a minimum quality that must be respected. Not going to buy any GPU from MSI ever again.

I don't believe it's something with MSI as a whole; I've been running an MSI RX580 Gaming X for 2 years now and haven't had any problems with it.

Maybe it was a bad unit?

Weird thing is, I couldn't find a single review that had bad things to say about the heatsink back when I bought it. Now there seems to be a new version of the card/heatsink though, so maybe they made it better. Doesn't help me or Mohandevir of course.

Exactly my experience too. And not long after that, Newegg got totally invaded with refurbished MSI Armor RX580 units (same model as mine). A really bad product from MSI, unfortunately.

Can't really say that the much more expensive MSI Gaming X model of my RX 480 is free of issues either - I mean, the heat sink is very strong and the card is very quiet under full load, but I had to re-seat the cooler and re-paste the card three times by now because it randomly lost die contact for no reason.

:O

Can't say for Nvidia, but it seems making a reliable AMD card is not a science MSI masters...

Last edited by Mohandevir on 4 Jul 2019 at 5:45 pm UTC

I had an Asus ROG Matrix R9 290 that had the exact same problem. The heatsink was loose and did not put enough pressure onto the GPU. I had to put washers under the screws to mitigate the problem but still had to reseat the heatsink every now and then. The card was obnoxiously loud despite being marketed as a premium model. It was getting decent reviews (I honestly suspect golden review samples, because users were not happy with their cards) and my experience with Asus had been good in the past, but that card was a big letdown.

My hypothesis is that most of these manufacturers design their stuff for Nvidia GPUs first and then reuse the same parts for AMD GPUs, but slight differences in GPU package dimensions, especially height, can cause problems. I think this is why Sapphire gets it right most of the time: they work with AMD exclusively.

I suspect Nvidia by now also simply can't open source their driver code. Too much third-party code in there. AMD went through that pain a while ago because they knew it would be the better option long-term than trying to fix up fglrx.

Not the current code, sure. But they can totally stop being jerks to Nouveau, and back it with their resources to match their blob in features and performance. They just don't care. It's clear it's not about the issues that AMD had with the tedious process of replacing what can't be opened.

Also, consider Nvidia's anti-competitive attitude. They profit by charging more for blobs that unlock features based on the market. If the driver were open, everyone would be able to use their hardware to its full potential, without any middleman dictating who can do it and charging them for it. It's likely the main reason they don't want Nouveau to succeed.

Last edited by Shmerl on 4 Jul 2019 at 7:24 pm UTC

@Mohandevir

I bought a Sapphire Radeon RX 590 Nitro+ a few months ago, and it is remarkably cool and quiet with good performance too, at least for the money.

I'm not sure if it works on SteamOS though, if you're still using it. When I bought mine I had to add a custom kernel since the existing one was too old for the card.

Some details about this particular card:

https://www.pcworld.com/article/3323380/sapphire-radeon-rx-590-nitro-review.html

At the time when I bought it, it was the best card for the money from the red camp. I think the Nvidia equivalent for it is GTX 1660ti.

SteamOS is not a problem. I use it on my secondary build (for my kids) along with an older GPU (where my actual GTX960 will be). Since the newer stuff is on my personal rig and I need more bleeding edge drivers, I use my "Steambuntu" setup. :)

Thanks for the input.

I made a PPA for Ubuntu, please help me test it! https://launchpad.net/~ernstp/+archive/ubuntu/mesaaco

Froze at Ubuntu login Screen lol

I don't think you should be using an experimental Mesa to replace the system one. It's always a bad idea. Just build it and load it on demand, only with the games that you test, without touching system packages that affect the DE experience.
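
For illustration, here is a rough sketch of that "load on demand" idea as a small Python launcher. It assumes the experimental Mesa was installed under its own prefix (the `/opt/mesa-aco` path in the note below is made up), that the RADV ICD file sits at the usual `share/vulkan/icd.d/radeon_icd.x86_64.json` location inside that prefix, and that the test build switches ACO on through `RADV_PERFTEST=aco`. The helper names `mesa_test_env` and `run_game` are hypothetical. Check the testing instructions for what actually applies to your build.

```python
import os
import subprocess
from pathlib import Path


def mesa_test_env(prefix, base_env=None):
    """Return a copy of the environment that points the Vulkan loader at a
    Mesa build installed under `prefix`. System packages are not touched."""
    env = dict(os.environ if base_env is None else base_env)
    # Assumed install layout: <prefix>/share/vulkan/icd.d/radeon_icd.x86_64.json
    icd = Path(prefix) / "share" / "vulkan" / "icd.d" / "radeon_icd.x86_64.json"
    env["VK_ICD_FILENAMES"] = str(icd)
    # Assumption: the test build enables ACO through RADV_PERFTEST.
    flags = [f for f in env.get("RADV_PERFTEST", "").split(",") if f]
    if "aco" not in flags:
        flags.append("aco")
    env["RADV_PERFTEST"] = ",".join(flags)
    return env


def run_game(prefix, argv):
    """Launch one game with the test Mesa; only this process tree sees it."""
    return subprocess.run(argv, env=mesa_test_env(prefix)).returncode
```

Something like `run_game("/opt/mesa-aco", ["./some-game"])` would then affect only that one process, so the login screen and desktop keep using the distribution's Mesa.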

Last edited by Shmerl on 4 Jul 2019 at 7:57 pm UTC
