Today AMD formally revealed the next-generation Radeon GPUs powered by the RDNA 2 architecture, and it looks like they're going to give NVIDIA a real run for their money.

What was announced: the Radeon RX 6900 XT, Radeon RX 6800 XT and Radeon RX 6800. The RX 6800 XT looks like a very capable GPU that sits right next to NVIDIA's RTX 3080 while seemingly using less power. As expected, all three support Ray Tracing, with AMD adding a "high performance, fixed-function Ray Accelerator engine to each compute unit". However, we're still waiting on The Khronos Group to formally release the vendor-neutral Ray Tracing extensions for Vulkan, which are still not finished (they have been provisional since March 2020), so for now DirectX RT was all AMD mentioned.

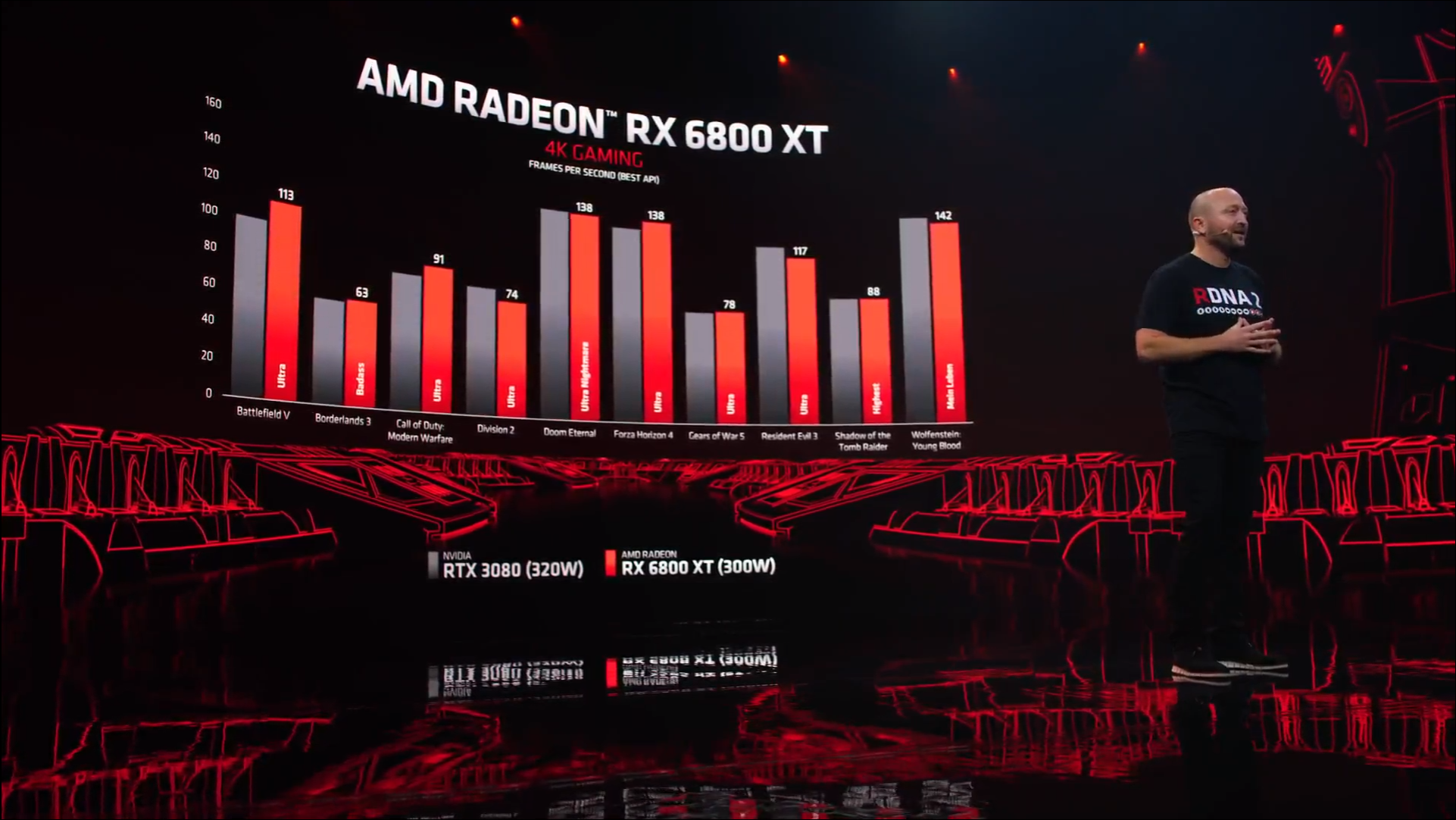

Part of the big improvement in RDNA 2 comes from what AMD learned with Zen 3, along with their new "Infinity Cache": a high-performance, last-level data cache they say "dramatically" reduces latency and power consumption while delivering higher performance than previous designs. You can see some of the benchmarks they showed in the image above.

As always, it's worth waiting on independent benchmarks for the full picture as both AMD and NVIDIA like to cherry-pick what makes them look good of course.

Here are the key specifications:

| | RX 6900 XT | RX 6800 XT | RX 6800 |
|---|---|---|---|
| Compute Units | 80 | 72 | 60 |
| Process | TSMC 7nm | TSMC 7nm | TSMC 7nm |
| Game clock (MHz) | 2,015 | 2,015 | 1,815 |
| Boost clock (MHz) | 2,250 | 2,250 | 2,105 |
| Infinity Cache (MB) | 128 | 128 | 128 |
| Memory | 16GB GDDR6 | 16GB GDDR6 | 16GB GDDR6 |
| TDP (W) | 300 | 300 | 250 |
| Price (USD) | $999 | $649 | $579 |
| Available | 8 Dec 2020 | 18 Nov 2020 | 18 Nov 2020 |

You shouldn't need to buy a new case either, as AMD say they had easy upgrades in mind: they built these new GPUs for "standard chassis", with a length of 267mm and two standard 8-pin power connectors, and designed them to operate with existing enthusiast-class 650W-750W power supplies.
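The two-connector claim can be sanity-checked with some simple arithmetic. This is a minimal Python sketch, assuming the commonly cited nominal PCIe limits of 75 W for an x16 slot and 150 W per 8-pin auxiliary connector (these figures are not from AMD's announcement), and using the board power figures from the specification table:

```python
# Assumed nominal limits from the PCI Express electromechanical specs,
# not figures quoted by AMD.
PCIE_SLOT_W = 75   # power available from an x16 slot
EIGHT_PIN_W = 150  # power per 8-pin PCIe auxiliary connector


def board_power_ceiling(eight_pin_connectors: int) -> int:
    """Maximum in-spec power from the slot plus the 8-pin connectors."""
    return PCIE_SLOT_W + eight_pin_connectors * EIGHT_PIN_W


def headroom(tdp_w: int, eight_pin_connectors: int = 2) -> int:
    """Watts left between the quoted board power and the in-spec ceiling."""
    return board_power_ceiling(eight_pin_connectors) - tdp_w


# Board power figures from the specification table.
for name, tdp in [("RX 6900 XT", 300), ("RX 6800 XT", 300), ("RX 6800", 250)]:
    print(f"{name}: {tdp} W of {board_power_ceiling(2)} W, headroom {headroom(tdp)} W")
```

This only covers the card itself; the 650W-750W recommendation also has to feed the CPU and the rest of the system, and the sketch does not model transient power spikes.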

A big portion of the event was dedicated to DirectX, which doesn’t mean much for us, but from the benchmarks shown we’ve been able to learn that they’re powerful cards that appear to take on even NVIDIA’s latest high-end consumer GPUs like the GeForce RTX 3080. So not only is AMD leaping over Intel with Ryzen 5000, it’s now leaving NVIDIA out in the cold too. It’s incredible to see how far AMD has surged in the last few years. Strong competition is exactly what NVIDIA and Intel have needed.

What will Linux support be like? You're probably looking at something like Ubuntu 21.04 next April (or comparable distribution updates) for reasonable out-of-the-box support, thanks to newer Mesa drivers and an updated Linux kernel, but we will know a lot more once the cards actually release and can be tested.

As for what’s next? AMD confirmed that RDNA3 is well into the design stage, with a release expected before the end of 2022 for GPUs powered by RDNA3.

You can view the full event video in our YouTube embed below:



Additionally, in case you missed it, AMD also recently announced (October 27) that it will be acquiring chip designer Xilinx.

Last edited by Shmerl on 28 Oct 2020 at 4:23 pm UTC

Too much talk about DX12. They said nothing about Vulkan so far...

While this bothers me as well, they usually talk about DX "feature levels", because "DX 12 Ultimate" sounds better than "Vulkan 1.2.34103 release 3".

Last edited by Liam Dawe on 28 Oct 2020 at 4:30 pm UTC

If the driver breaks with a kernel update then one would assume that it's obvious that it is the kernel which breaks its own interface and not vice versa. The kernel doesn't provide any stable interface. Read about the latest breakage: https://lwn.net/Articles/827596/

We are definitely talking about different things then. AFAIK, the Nvidia proprietary driver doesn't implement the DRM API, so you are installing a piece of software that doesn't follow Linux design guidelines.

Regarding the problem you mentioned, the issue Nvidia has with each new kernel release is that parts of the driver may break because the kernel's internals change from time to time. If your driver is not mainlined, you have to follow the kernel releases and patch your driver in parallel (there is no way around it). Still, mainlining your kernel driver doesn't in any way prevent you from also providing a DKMS driver to download and install on older kernels (of course, the company in charge of the module has to adapt its code for older kernels where necessary). You can see an example of this with AMDGPU-PRO, which ships the AMDGPU DRM driver as DKMS for the kernels supported by Ubuntu LTS and RHEL releases.

Last edited by x_wing on 28 Oct 2020 at 4:42 pm UTC

Awesome presentation. But I think I just saw my wallet running out of the door screaming.

So did mine. The 6800 (non XT) is more than enough for my needs.

This is what an amd fanboy would say, not a conscious customer who evaluates a product properly. Nothing you mentioned is actually an evaluation of any aspect,

This is based on actual testing of 50+ pieces of hardware in more than 70 configurations; it is months of testing (and in fact, sometimes, years of debugging).

All those tests in that page were driven by developers or under developer supervision, they are not automated. I was involved in all of them in several stages.

As a game developer you develop your software for a specific driver/hardware combo.

We don't develop for a specific driver/hardware combo. You're talking like a web developer from Internet Explorer 6 era.

The specific bug I talked about is one where the Nvidia driver wrongly announces that the hardware supports a feature it does not support. That's unrelated to how the game is developed. A game written properly against the OpenGL standard queries the availability of the feature, so it ends up enabling code the hardware does not support because the driver made a false statement. So we have to make some guesses to detect the faulty driver/hardware combinations and not trust what the driver says.
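The workaround described here (query the feature the standard way, then override the driver for known-bad combinations) can be sketched roughly as follows. This is a hypothetical Python illustration, not the game's actual code: `DriverInfo`, `KNOWN_FALSE_CLAIMS`, the `ExampleGPU` and `ExampleRadeon` renderer names and the `GL_ARB_example_feature` extension are all made-up placeholders. In a real OpenGL program, the vendor, renderer and extension strings would come from `glGetString` and `glGetStringi`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DriverInfo:
    """What the driver reports about itself (hypothetical container)."""
    vendor: str            # as GL_VENDOR would report it
    renderer: str          # as GL_RENDERER would report it
    extensions: frozenset  # extension names the driver advertises


# Hypothetical quirk table: (vendor substring, renderer substring, extension)
# combinations known to advertise an extension the hardware cannot really run.
KNOWN_FALSE_CLAIMS = [
    ("NVIDIA", "ExampleGPU", "GL_ARB_example_feature"),
]


def feature_usable(info: DriverInfo, extension: str) -> bool:
    # Standard path: only use features the driver says it supports.
    if extension not in info.extensions:
        return False
    # Workaround path: refuse to trust the driver for known-bad combinations.
    for vendor, renderer, ext in KNOWN_FALSE_CLAIMS:
        if ext == extension and vendor in info.vendor and renderer in info.renderer:
            return False
    return True


faulty = DriverInfo("NVIDIA Corporation", "ExampleGPU/PCIe/SSE2",
                    frozenset({"GL_ARB_example_feature"}))
healthy = DriverInfo("AMD", "ExampleRadeon", frozenset({"GL_ARB_example_feature"}))

print(feature_usable(faulty, "GL_ARB_example_feature"))   # False
print(feature_usable(healthy, "GL_ARB_example_feature"))  # True
```

The weak spot is the quirk table itself: it has to be maintained by hand, because the standard query alone cannot be trusted on the affected combinations.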

And outside of game development, I have seen Nvidia cards that required plugging a screen into the VGA port to stop the Nvidia driver from complaining when using the HDMI port (while not being able to display anything on that VGA port anyway). I have seen Nvidia cards display the early BIOS screen on one port and the operating system on another, requiring you to unplug and replug the screen during the boot process to get a continuous display. This has nothing to do with game development. Some cards just disconnect themselves from the PCIe bus with the proprietary driver, but not with the open source driver. This has nothing to do with game development.

I myself had to write Xorg.conf snippets to make Nvidia hardware work on actual machines, something people using AMD or Intel haven't had to do since 2008 or so. What a blast from the past!

The thing is that Nvidia products are like dragsters: they can shave one second off their competitors' time on a straight line, but you don't know what happens when they have to take a turn. They are not meant to take your children to school or to complete the Dakar Rally, and they may just explode in your face before the race starts.

[ 195.564010] NVRM: GPU at 0000:01:00.0 has fallen off the bus.

True story.

Last edited by illwieckz on 28 Oct 2020 at 4:59 pm UTC


aaaand you're still pretending AMD drivers are near flawless and Nvidia is garbage because you found issues. I can also link issues: https://gitlab.freedesktop.org/drm/amd/-/issues/929

Be my guest for more: https://gitlab.freedesktop.org/drm/amd/-/issues. I never had to write xorg.conf to make my hardware work; nvidia-settings takes care of the settings. My Nvidia card displays the BIOS and everything else on the same screen at boot. Did your GPU "fall off the bus"? Your hardware has probably started to fail or is not connected properly. There is a very high chance it's a PSU issue.

Game developers definitely target specific hardware, it's pointless to deny it. They literally mentioned that in the video from this article.

AMD drivers are not and never were flawless, but I have run into far more problems with Nvidia and their shitty drivers, problems that affect basic system functionality even though I don't have an Nvidia card in my computers, than with the AMD drivers. To me these things are a lot more annoying than AMD producing graphical glitches in games 3-4 years ago.

Also, your point about "ideology" earlier is clearly faulty. Even if you ignore all the benefits of access to freer software, you get a lot of very practical (not legal) advantages too. It's simple: even if you like Nvidia, hardware by and large tends to work better with Linux the freer its drivers/software are. It's the same with printers: the ones with largely free drivers always seem to work better than the ones without.

I never had to write xorg.conf to make my hardware work, nvidia-settings takes care of the settings.

You said it: “nvidia-settings takes care of the settings”. You're experiencing Linux graphics as if you still lived in 2004. It's not normal that you have to use nvidia-settings. It's wrong that you have to use it. Neither Intel nor AMD hardware requires anything similar; that stopped on the AMD side many years ago. The thing is: Nvidia is a decade late in the race.

I never had

My nvidia card

OK, you answer with a “works for me” statement without risking leaving your comfort zone.

Do you understand that you're denying months of actual, serious testing for the sole reason that you may not have tried hard enough to face a problem yourself?

Did your GPU "fall off the bus"? Your hardware has probably started to fail or is not connected properly. There is a very high chance it's a PSU issue.

This was verified with multiple graphics cards of the same family, on multiple motherboards, with multiple PSUs (I drove the tests myself and tried all the possible combinations), and you know what? The nouveau driver runs flawlessly on the same hardware (whatever the combination).

Game developers definitely target specific hardware, it's pointless to deny it. They literally mentioned that in the video from this article.

Targeting specific hardware or a specific driver is only required to get an extra performance boost in specific situations. Outside of optional dedicated optimizations, no one has to do it. And no one should have to target specific hardware or drivers to work around driver failures.

Targeting specific hardware and drivers must remain optional, not a requirement. And you talk as if it were a requirement.

Are you perhaps among those people who are afraid their games would not run outside of Nvidia, like the people who were afraid to lose their favorite websites if they switched from IE to Firefox two decades ago?

If you really buy Nvidia hardware because Nvidia makes you believe you'll lose something you have, then you're the victim of a racket.

Good job AMD!

Also, what's that "direct storage" thing? How is a GPU supposed to support it?

At some point in the past they even integrated a 1TB SSD into one of their GPUs to get access to larger storage without being slowed down by the CPU and other components:

https://www.pcworld.com/article/3099964/amds-new-ssg-technology-adds-an-ssd-to-its-gpu.html

I guess this may be a variant of that idea which uses the computer's own SSD (so they don't have to ship one themselves, which reduces the price) while getting close to that level of performance. There have been huge improvements in PCIe and in AMD's own interconnect technologies in recent years.


It's not OS dependent? I.e. would it work on Linux too?

As a specific example of this: dma-buf based video capture in Wayland with OBS performs vastly more smoothly than what can be achieved with xcomposite based capture in X11. Integration with the 'native' Linux graphics stack, made possible because you have compatible licensing, is a very real benefit.

And unless Nvidia can drastically change what they do, the integration problems are going to get worse as more stuff moves to Wayland-based environments. We already see this with Firefox video decode acceleration depending on dma-buf.

Is it not "normal" to use a GUI to set display options instead of fiddling with the terminal? Give me a break...

You don't notice that you're talking about something Intel and AMD users haven't had to do for many years. They don't even think about it, or don't know it exists, because they have no need for it. Intel and AMD users don't need to set display options with vendor-specific tools. Period. They just use their desktop and its standard interfaces, graphical or textual, whatever their personal taste.

On the Intel and AMD side, there is no need for hardware-specific intel-settings or amd-settings tools to set display options. Period. That mess ended years ago for people using free and open source drivers.

That's what some others have said in that thread: even people not caring about the mindset of open source ideology can find benefits in what open source model achieves on the technical side: the best integration they can get from hardware to software.

Nvidia is now decades late on Linux integration, and things will get worse and worse, probably faster than before, as old technologies like Xorg start to rot.

And if Nvidia wanted to go the open source route to fix that integration issue and avoid that software rot, they are also a decade late to the game. They are in a really bad position for Linux's future, worse than they have ever been.

Last edited by illwieckz on 28 Oct 2020 at 6:15 pm UTC

Frankly, I don't appreciate the condescending tone in your reply to my original comment either, lunix. I take into account open source drivers in my purchasing decisions, you don't. There's no need to make me sound like I'm somehow an idiot for doing so, is there?

But you go on. You imply that my (until an hour ago) top-of-the-range AMD card is "entry level" and can't power a 4K screen... I... what? It runs everything at 4K, 60fps, full settings, so far. Actually, I guess it's much more than that, since I bought this card for VR and I get 90Hz on both 1440 x 1600 eyes. And since the AMD driver is so far ahead of the Nvidia driver for VR, I get async reprojection for a smoother overall experience. It's quiet too.

And you imply I'm an idiot for running X, on a single display, instead of Wayland on multiple displays too? Jesus, gimme a break. I don't know how to respond to that, so I won't.

My RTX 2080 delivers more performance with fewer watts than the average 5700 XT.

Wait... you've just compared a $1k product with a $400 one? Please check what pound for pound means.

I don't contest that the green cards are probably better right now. I'm just saying that at the same price AMD brings proper drivers and Nvidia doesn't. And that could be a defining factor for quite some people - without resorting to pure "ideology".

Example: I'm in the market for a mid-to-higher priced GPU with hassle-free out-of-the-box Linux distro support. I know, or have at least heard of, most of the things you've mentioned, but my prerequisites for a purchase are general competitiveness of the product AND open-source support. And this is where AMD wins outright, even though an Nvidia card could be marginally better at the same price (or not...).
