Today AMD formally revealed the next-generation Radeon GPUs powered by the RDNA 2 architecture, and it looks like they’re going to give NVIDIA a real run for their money.

What was announced: the Radeon RX 6900 XT, Radeon RX 6800 XT and Radeon RX 6800, with the Radeon RX 6800 XT looking like a very capable GPU that sits right next to NVIDIA's GeForce RTX 3080 while seeming to use less power. All three will support Ray Tracing as expected, with AMD adding a "high performance, fixed-function Ray Accelerator engine to each compute unit". However, we're still waiting on The Khronos Group to formally release the vendor-neutral Ray Tracing extensions for Vulkan, which still aren't finished (provisional since March 2020), so for now DirectX RT was all they mentioned.

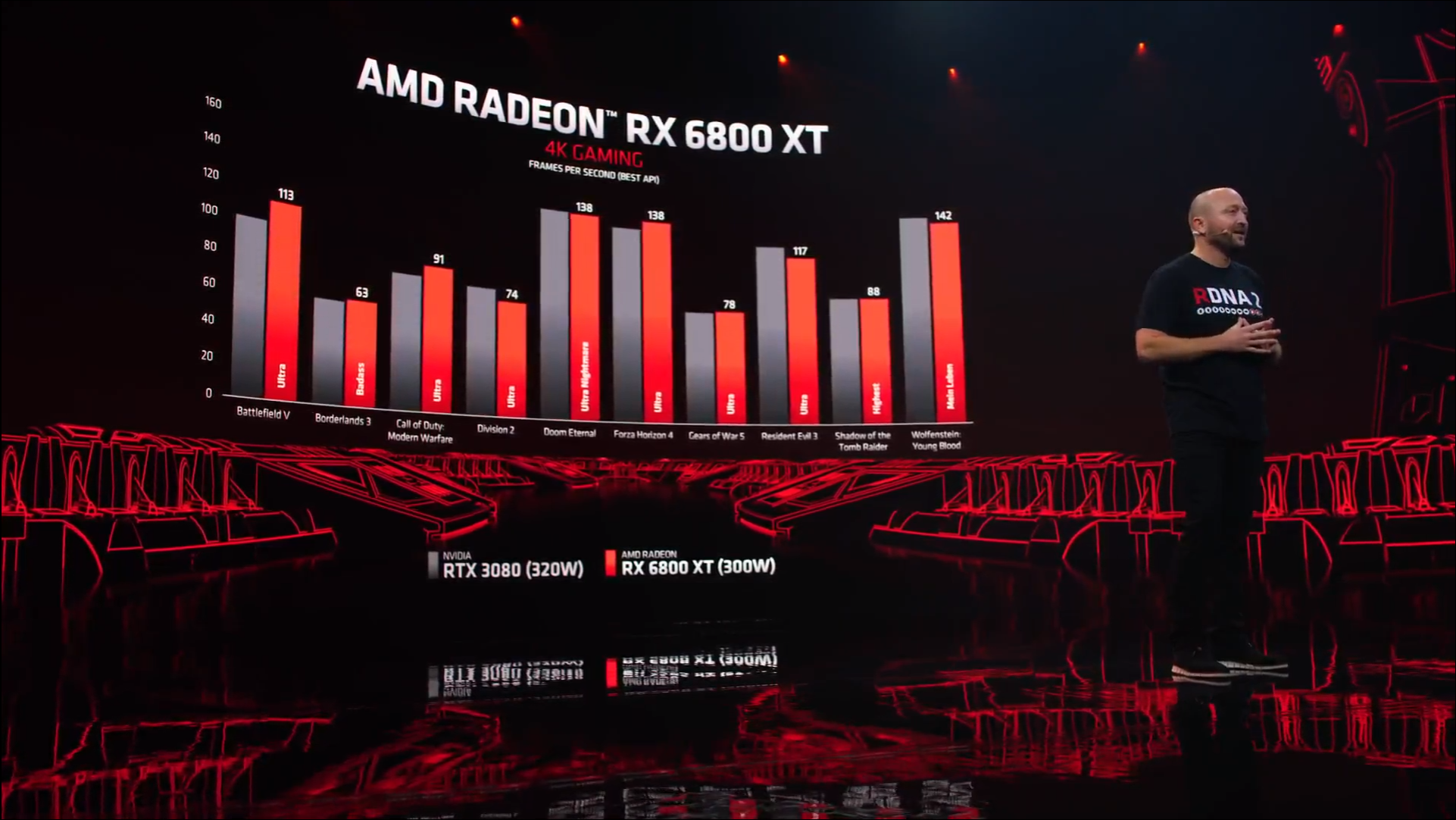

Part of the big improvement in RDNA 2 comes from what they learned with Zen 3 and their new "Infinity Cache", which is a high-performance, last-level data cache they say "dramatically" reduces latency and power consumption while delivering higher performance than previous designs. You can see some of the benchmarks they showed in the image below:

As always, it's worth waiting on independent benchmarks for the full picture as both AMD and NVIDIA like to cherry-pick what makes them look good of course.

Here are the key specifications:

| | RX 6900 XT | RX 6800 XT | RX 6800 |
|---|---|---|---|
| Compute Units | 80 | 72 | 60 |
| Process | TSMC 7nm | TSMC 7nm | TSMC 7nm |
| Game clock (MHz) | 2,015 | 2,015 | 1,815 |
| Boost clock (MHz) | 2,250 | 2,250 | 2,105 |
| Infinity Cache (MB) | 128 | 128 | 128 |
| Memory | 16GB GDDR6 | 16GB GDDR6 | 16GB GDDR6 |
| TDP (Watt) | 300 | 300 | 250 |
| Price (USD) | $999 | $649 | $579 |
| Available | 08/12/2020 | 18/11/2020 | 18/11/2020 |
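For a rough sense of value from those listed figures, here is a small Python sketch that works out the price per compute unit for each card. The numbers are AMD's announced ones from the table; the dictionary layout and function name are our own, and this crude ratio says nothing about real-world performance.

```python
# Figures copied from AMD's announced specifications.
# The structure and names below are illustrative only, not from AMD.
cards = {
    "RX 6900 XT": {"compute_units": 80, "tdp_watts": 300, "price_usd": 999},
    "RX 6800 XT": {"compute_units": 72, "tdp_watts": 300, "price_usd": 649},
    "RX 6800": {"compute_units": 60, "tdp_watts": 250, "price_usd": 579},
}


def price_per_compute_unit(card):
    """Return the list price in USD divided by the number of compute units."""
    return card["price_usd"] / card["compute_units"]


if __name__ == "__main__":
    for name, card in cards.items():
        print(f"{name}: ${price_per_compute_unit(card):.2f} per compute unit")
```

By this measure alone the RX 6800 XT comes out cheapest per compute unit, at roughly $9 against roughly $12.50 for the RX 6900 XT, though clocks, memory bandwidth and drivers all matter far more in practice.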

You shouldn't need to go buying a new case either, as AMD say they had easy upgrades in mind: these new GPUs are built for a "standard chassis" with a length of 267mm and two standard 8-pin power connectors, and are designed to operate with existing enthusiast-class 650W-750W power supplies.

There was a big portion of the event dedicated to DirectX, which doesn’t mean much for us, but what we’ve been able to learn from the benchmarks shown is that they’re powerful cards that appear to compete with even NVIDIA’s latest high-end consumer GPUs like the GeForce RTX 3080. So not only are AMD leaping over Intel with the Ryzen 5000, they’re also now leaving NVIDIA out in the cold too. Incredible to see how far AMD has surged in the last few years. This is what NVIDIA and Intel have needed: some strong competition.

How will their Linux support be? You're probably looking at around the likes of Ubuntu 21.04 next April (or comparable distro updates) to see reasonable out-of-the-box support, thanks to newer Mesa drivers and an updated Linux kernel, but we will know a lot more once the cards are actually released and can be tested.

As for what’s next? AMD confirmed that RDNA3 is well into the design stage, with RDNA3-powered GPUs expected before the end of 2022.


Additionally if you missed it, AMD also recently announced (October 27) that they will be acquiring chip designer Xilinx.

I did try to buy a 3080 on launch but no stock exists anywhere except for 200% marked-up models...

The 850 should handle the power draw of the 6900XT just fine

Why do people think they need an 850W PSU for a 300W card? I've always been confused by this thinking. Is your CPU drawing 500W?

The last time I had an ATI card (as they were called back then) it resulted in a "hardware manufacture error". Been boycotting them ever since.

If I had this policy, I would actually not be using computers right now because all brands have burnt me at some point (basically bought all CPU/GPU brands over the decades at one point or another).

Last edited by TheRiddick on 29 Oct 2020 at 10:39 am UTC

I thought that it is sensible to think that GamingOnLinux.com would focus on gaming

Now see, there's the root of all your problems. This site is not about *Gaming*OnLinux, it's about GamingOn*Linux*. Linux is the main focus. And people who've deliberately chosen to game on an open platform like Linux, as opposed to closed platforms like Windows and MacOS, obviously consider openness a priority when choosing what hardware to buy. Otherwise why the fsck would we bother with Linux in the first place? If we only wanted pure performance like you claim, then we'd be gaming on Windows and arguing semantics on TenForums, yeah?

It's all a matter of ideology (like the Purple dude correctly tried to tell you but you obviously failed to listen) and yours is blinding you to these simple facts.

linux gaming is pretty much on life support. Proton keeps it alive but for how long? Valve created linux gaming because they saw it as a not-windows-but-kinda-works alternative

Not that wayland is going to make the linux desktop competitive with macos or windows when it barely competes with xorg...

Wtf? Birdie, is that you?

Linux will never get really popular if it won't get with the times and deliver actual value instead of advertizing ideologies.

I'm sorry to disagree, but I am one of the people who think performance is not the only valuable metric.

Linux, without these ideologies, is basically Windows. Right now, I honestly don't see the interest a user would have in moving from Windows to Linux without these ideologies, considering Linux is not really supported by companies outside of the server world.

Oh and these so-called ideologies do have concrete consequences in real life: for instance I can still run very old AMD GPUs thanks to AMD open sourcing at least their specifications at the time, and the performance is still pretty good (considering the GPU). On the other hand, I recently revived a PC with an old NVIDIA GPU and wasn't able to install the official NVIDIA drivers because they decided not to support this card anymore, so the only fallback was nouveau, which is less than ideal 3D performance-wise. Another example is that AMD cards do work with Wayland right now, while it is still not possible with NVIDIA's. And another example is that I'm not afraid to upgrade my system because I know the AMD drivers are coming with the new Linux kernel. Oh and I like the fact that any developer can improve the AMD drivers now, as some improvements in Mesa can benefit the whole graphics stack.

To me, thinking Open Source is merely an ideology means you completely missed the point here. Open Source is a way to prevent monopolies by releasing control over the source code and letting anyone read, modify, and run it.

And, please, don't say things like "For most people(>99%)" if you don't have at least one source to prove it. This statement is a bias that is but an extrapolation of your way of thinking: "if I think this is the best, then everyone must be thinking the same".

Last edited by Creak on 29 Oct 2020 at 12:28 pm UTC

This topic is also going into dangerous territory and no longer relevant to the article, other than that it's rather obvious that open source drivers really do matter to some people, it's not something to be dismissed, and will be a factor in purchasing decisions.

You are so right about this. This comments thread looks more and more like a Phoronix forums thread

On a personal level, I've just bought my 5700XT and it plays every game I own at 4K with no hiccups (GOL Discord user, Michael, has a great series of videos demonstrating the 5700XT's 4K performance on their Youtube channel - all the vids from about April onwards are the 5700XT), but who doesn't love a bit more power and future proofing? And that Ray Tracing... that's going to be big in the coming months and years. Unlike PhysX, Ray Tracing is properly cross-platform (or will be soon) and I think it's going to take off in a pretty big way.

I might take a look at upgrading early next year, assuming the prices don't skyrocket thanks to mining (again).

I've read somewhere, AMD are planning to introduce new cards specifically for mining, so it should be better than before.

The '499 USD' 3070 just hit the stores here at prices equivalent to 667* USD, so there is some room to compete.

*) calculated from DKK back to USD using today's currency rates.

Of course, maybe I'm just being ideological for prioritizing good integration with the rest of the ecosystem and valuing silly things like open source drivers over brand loyalty

Even something so simple as: how much power do they draw when idle, what kind of heat do they generate, what noise is produced from the fans running. Power consumption is actually another very important metric of mine, and I don't go out buying the latest & greatest for that reason. I wait a bit to see what comes out that might not use more electricity than my entire current system on full load. Bills to pay and all that.

This! Noise and power draw. On purpose, I never put more than a 500W PSU in my rigs and never overclock. Personal choice, for similar reasons as yours. Above that, it's too pricey, imo. I believe that RTX will gain mass adoption when it meets these requirements, also. Even on PS5/Xbox Series X, not all games will make use of RTX.

"Among Us and Fall Guys with RTX!" Like caviar in Kraft dinner!

Feels like this discussion has lasted way too long.

You got your opinion, fine... Stick to it. Your point of view is your own and has as much value as any other from anyone here. It's all biased in some way by our own personal experiences, which differ from one person to another...

Do you want to have an open discussion or do you just want to show us all that you are right? At this point, it's beginning to look like trolling.

Please, be on your way, there is nothing to see...

That is pretty much the question of support. You can try older drivers for older nvidia cards, but using older cards is pointless because they will just waste electricity and barely deliver any performance - that is why companies stop supporting them.

What... Producing new cards is a bigger waste of electricity than using older cards. In this domain, replacing the components is worse than using older ones. But I assume you care only about your electricity consumption, right?

But you assume that everybody just wants to play the big recent games while maintaining great performance; in this case sure, it's pointless to use old cards.

Using older cards is pointless for you, because you only seem to care about performance (and features, we know).

Gaming doesn't mean "AAA gaming" for everybody, and that's particularly true for Linux users; as others said, we could just stick with Windows.

If someone is happy using an old card because it just works fine, and one day Nvidia (or another vendor) decides to not support it anymore, it's still an issue. This is a waste; throwing away components that still work because people have to buy new ones is nonsense to me...

I read the whole thread, and, lunix, what a guy you are.

At GOL we're used to seeing guys like you, from time to time.

What's the bottom line of all this, lunix? What are you trying to convince us of?

You're overthinking it, I just wanted to warn people to carefully evaluate features because if they don't do that they can get burned and then a few months later they'll start to rant how they'll never buy anything from $gpu-company again. And features aren't going to develop themselves just because you buy another product. Nothing special, but it became political. All concerns are valid, the point is to be aware of them.

Agreed. And when you say "$gpu-company" we can add "from chipset to manufacturer". Not all AMD or Nvidia GPUs are born equal (MSI, ASUS, Gigabyte, Sapphire, Power Color, etc...) Making the difference between a manufacturer's bad "QA decision" and a chipset flaw is also important. Been there a couple of times.

Last edited by Mohandevir on 29 Oct 2020 at 4:00 pm UTC

I do have to admit personally, even as a typical NVIDIA fan that AMD are looking mighty tempting.

Join us

6900 XT or the 6800 XT - hmmm....

Yeah same here. I'm actually quite happy with my GTX 1080, but due to VR I want to go for something more powerful soon. I don't mind that the Nvidia driver is closed source; I have to use dkms anyway for an out-of-tree gaming wheel driver. That said, not having async reprojection* or being able to switch to Wayland might be the reason to go for one of those cards.

Let's see what the actual launch and support look like...

* I don't know if it is a hack or not, but it really does serve its purpose.

Last edited by jens on 29 Oct 2020 at 6:44 pm UTC

You are describing your ideology while claiming not to have one. Kind of underlines most of what I said.

Every product is different and the ideology is the least important difference between them.

This statement involves at least two major misconceptions. The first is about the nature of the word "important" as it relates to individuals' choices. Obviously, you cannot define for another person what is important to them. If their values are different from yours, what is important to them will also be different. People have different needs and so on. So for instance, if I'm buying a consumer product, it may be important to me that it be purple (note my handle). But I would not claim to you that purpleness is the most important feature of that consumer product and you are a fool making a mistake if you fail to get a purple one. I accept that, for whatever perverse reason, for many people purpleness just isn't that important. So saying ideology is, or is not, important to someone else's choice is in a basic sense a category error.

The other is about the nature and implications of ideology. An ideology is an understanding of how the world works, in a political and economic sense, combined with some values. If you have an ideology, inevitably it has implications about how the world should work--it might imply that the world should work exactly how it does work, although given how it does work that would be kind of a crappy ideology.

I say "if", but in fact everyone has an ideology. "Pragmatists" who imagine they do not have one are, in reality, just practising some received ideology they do not understand because they absorbed it without thinking about it. You might say instead of them having an ideology, it has them. Refusing to think through what your ideology is or, if you do, to take any actions derived from it, is basically a matter of passivity--accepting that you will be acted on, not an actor, and that the world will be the way all the people who do act cause it to be. Now that's OK in a way, but people who abdicate their agency that way shouldn't be getting on the case of people who have thought things through and do have positions.

Basically, if you haven't reached an understanding of the world or can't be bothered to act in ways consistent with it, shut up when those of us who do know what we're talking about and do have some consistency with our beliefs are talking.

There are various misconceptions in your comment:

1. When we are talking about buying products we shouldn't just look at one specific thing as the deciding factor - especially when we want to build a gaming PC. This is what I was trying to tell people but it seems like it fell on deaf ears. If you want a good purchase then you should be really careful what you buy because marketing exists and it is ready to bait you with illusions. Yes, people want different things but ultimately people want features and not vaporware or other illusions.

2. An ideology is nothing but a set of ideas - a dream. "No Tux No Bux", "Free Software for all", "The year of the linux desktop" - how are those working out? Back to square one: people want features which get things done. Ideologies might give you some drive and they might cripple your capabilities but nothing more. Linux rules the server space because of its capabilities. I thought that it is sensible to think that GamingOnLinux.com would focus on gaming but it seems like politics is more important in this community. But you can see that you can only get so far with ideologies and no political power: linux gaming is pretty much on life support. Proton keeps it alive but for how long? Valve created linux gaming because they saw it as a not-windows-but-kinda-works alternative. Businesses evaluate business capabilities and then they invest. The FSF tries to promote "user freedom" with linux and other free software and yet there are many who say linux is not about choice - who is right and who is wrong? Or is it just another grey area? In the end, we depend on companies. Developers get paid to do things professionally because our charities are just small change.

3. No, not everyone has an ideology and operating through ideologies is not a rational thing to do. Ideologies are just restrictions: it's one thing to think you know how the world should work and another thing to know how it would be better. It's childish to assume that someone knows how to do things the best way. Yes, we might know how to do some things, but in the end we should strive for what is best for everyone and for that we need to carefully evaluate our decisions. Of course, if you just want to follow a set of conventions in which people already decided everything for you then you can do that too!

4. Telling people to "shut up" won't make them shut up or think differently - whether you like what they say or not. And it definitely won't make them listen to you, especially if you say absurd things like how you "reached an understanding of the world"

What's clear however is the position of the Linux project itself (i.e. kernel developers and maintainers). Linux is rooted in FOSS, and the blob approach is not something that Linux as a project appreciates. It might be merely tolerated, but it's not a good thing. There is nothing to debate about that, and blob proponents like Nvidia know it well.

Last edited by Shmerl on 29 Oct 2020 at 6:25 pm UTC

Cool.

The only detail I'm currently interested in though is WHEN THE FECKING rdna1 PRICES WILL GO DOWN

On an entirely selfish level, hopefully not before I sell my Red Devil

Every product is different and the ideology is the least important difference between them.

For you. Not for me. The ethos of a company, its ethical stance, and its impact on fostering a cultural shift to open methodologies is the single most important aspect of choosing my hardware. Then it's performance, then it's heat/noise/efficiency. Finally, its price comes into consideration.

It's weird to me that you're commenting on a Linux site and don't understand this, or somewhat buy into it. But I suppose as Linux increases in popularity, there will be more and more people like yourself who don't care about open standards (or least, care as much as others do).

I'll buy an AMD card when they have working Linux drivers available at launch. I'm not waiting six months for the Mesa guys to get their new cards working when Nvidia has Linux drivers available for their new cards on day one. I'm not supporting a company that treats my OS of choice like a second-class citizen.
