One day Google might catch a break with its cloud gaming service Stadia, but that day isn't today, and perhaps rightfully so in this case. A New York resident has filed a proposed class action lawsuit over the streaming quality and display resolution on Stadia.

As initially picked up by ClassAction, the lawsuit doesn't just involve Google. It also takes aim at Bungie and id Software, claiming that all three misled players about the expected resolution when persuading people to pay upfront for the Founder's Edition and Premiere Edition bundles, which came with the Stadia Controller and a Chromecast Ultra.

The lawsuit was originally filed in October 2020 and was only recently moved from Queens County Superior Court to New York federal court on February 12, so it's all still ongoing, and these things tend to take plenty of time.

The problem lies in how it was all initially advertised. According to the lawsuit, Google claimed that Stadia was "more powerful than both Xbox One X and Playstation 4 Pro combined" and that "all of the video games on the Google Stadia platform would support 4k resolution at launch". Interestingly, the lawsuit seems to indicate that the free $10 / £10 credit Google gave away on Stadia was the result of "months of settlement negotiations", which is the first I've heard of it.

Not only that, the lawsuit alleges that customers were essentially beta testers until the launch of the free version of Stadia that anyone can now sign up for (with Stadia Pro now optional).

As someone who picked up the Founder's Edition, I can definitely agree that it felt like we were all beta testing for a wider rollout, and clearly Google's advertising and marketing were far too hyped up and full of hot air about the expected quality and resolution of Stadia games. It definitely doesn't help that Google's Phil Harrison replied to people on Twitter to hype up the quality even further:

Yes, all games at launch support 4K. We designed Stadia to enable 4K/60 (with appropriate TV and bandwidth). We want all games to play 4K/60 but sometimes for artistic reasons a game is 4K/30 so Stadia always streams at 4K/60 via 2x encode.

Now, though, the service is actually pretty good, but Google absolutely handled it poorly to begin with. Even now, quite a few games still run at 30FPS even at 1080p, which is not great, and Google seriously needs to do a better job of noting this for each game, which it currently does not.

It will be interesting to see what becomes of this lawsuit, if anything.

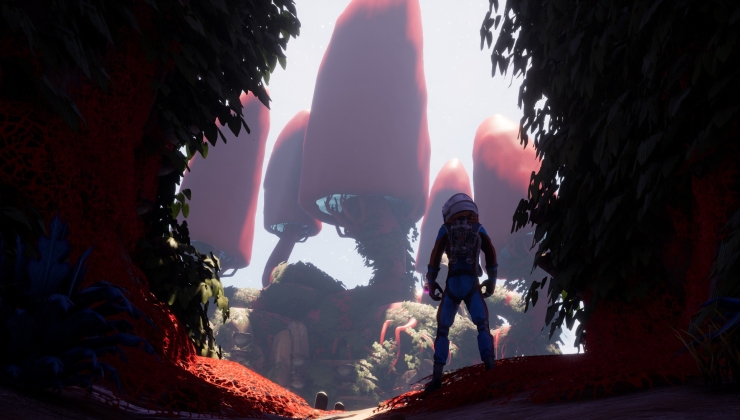

Additionally, Stadia is currently under a bit of fire from users because Journey To The Savage Planet from Typhoon Studios (which Google acquired and then shut down) is broken for some. Since Google let the developers go when it announced it was no longer making first-party games, it looks like there might not be anyone currently available to fix it. That's a bit of a disaster, eh? Update: Google has now fixed it, after around 20 days.

Class action is no action, the only ones who profit are the lawyers.

If it goes through, the target loses, though.

Sometimes, that is the best you can hope for.

All this while they were even talking about 8K. They really, really need to stop this over-promise, under-deliver thing they're doing.

I don't personally care about 4K (I think 1440p is the sweet spot); I care more about a minimum of 60fps. And I doubt it's Stadia's choice here (rather Bethesda's), but it's ridiculous that (as stated in the article) Elder Scrolls Online still runs at 30fps even when cranked down to 720p.

Yeah, there seems to be a heavy disconnect between marketing/sales and the actual development team.

I was recently arguing back and forth with someone who is clearly of the 'PC Master Race' persuasion. I could be considered one as well, but the older I get (as I told him), the more I lean toward playing games on my TV with a controller, mainly because I already spend far too much time on a keyboard and mouse for work. So I lean toward playing games on the PC with gamepad support.

But he was insisting that the Atari VCS sucked because it couldn't handle 4K@120fps, without also admitting that 99% of PCs can't run games at max settings at 4K@120fps either...

4K isn't really a thing unless you have one of the unobtainium current-gen GPUs, and certainly not at 120fps. Mind you, I would MUCH prefer high detail over high resolution. Unless you are strapping the screens to your face (in VR), a resolution that high is only worth it if you can already run maximum settings. I'd rather have more detail and effects than tinier pixels. Streaming in general tends to make things blurry in order to increase framerates.

Class action is no action, the only ones who profit are the lawyers.

So just to be clear on your perspective here, what would you recommend instead? No class action suits allowed? Rigorous regulation of corporations to make class action suits unnecessary? Some kind of state-administered pro bono class action suits so that those suing get the money?

4K is nice on a projector, but I've never really needed it on a PC screen... And yes, unless you've got latest-gen hardware, 4K/120fps is dead. Even when console makers pretend they can actually do it, they forget to mention that it's 120fps or 4K, or that it relies on "dynamic resolution".

And yeah, some people are kind of absurd with their requirements.

Personally, I think 2K is becoming the sweet spot, and will be for the foreseeable future. You can actually get high framerates with high settings without spending €1K on a GPU plus €300 on an 850W power supply to accommodate it (assuming you can even find a new GPU...).

I can’t wait for Stadia to die out since it’s one big circle jerk here. I get it... Stadia runs Linux but it’s closed off.

It allows people to play more games on Linux, so we cover it. There's no circle jerk about it, and to say so is pretty ridiculous. Do we need to go over this together again?

Don't like reading about a topic? Be an adult about it and don't click the link, or, if you really struggle with that, add it to your list of blocked tags in your settings.

Last edited by Liam Dawe on 23 Feb 2021 at 5:52 pm UTC

I can’t wait for Stadia to die out since it’s one big circle jerk here.

Seriously? I've heard reasonable arguments against Stadia, but yours is just silly.

Maybe if it were a big circle jerk, a certain group of people would enjoy it more.

;)

I remember watching a documentary about IMAX a few years back, which showed them meticulously cleaning each frame of film by hand and then carefully digitising it... at 2K. I'm not going to say 4K is snake oil; more resolution can't be worse (leaving aside compression issues, etc.), and I'd be surprised if IMAX weren't using it, or even 8K, today. But it's way overkill for relatively small screens, at least with current technology.

Mind you, my TV's still SD. Not, again, that I don't appreciate higher resolutions, but... meh, it's only TV.

I'd say the case is better for small screens than big ones. Watching soaps or a 20-foot face gurning emotively: meh. Having clear text with properly shaped letters, and no chunky aliasing on edges in games, are the kinds of things that make a difference when you're close to a screen, which you will be in small-screen use cases.

I have an old CRT TV too, for playing old Sega Genesis and SNES games via emulators.

Hmm, it depends how small. On a laptop, I tried both 1080p and 4K, and honestly, apart from my battery draining faster, I did not see any difference...

For a work screen, so 27" or 32", it's more open to discussion, but I also stay at 2K there. That's partly because some tools I use have no proper support for high resolutions and call straight into X11, which no modern system can save you from (aren't shitty proprietary tools written in the dinosaur age wonderful...). Even without those, I'm not sure, because at 2K I can easily have 9 terminal panes with vim or the tmux command line without any trouble reading them, but yeah, maybe I would gain a little from 4K.

For gaming, a high framerate is honestly more important than a higher resolution, so for me the choice is a no-brainer: 2K all the way.

As for a bigger projector screen, 1080p definitely gets blurry in games. I am currently looking seriously at upgrading to 4K for that use. The issue is finding a 4K projector with decent latency.

Well, considering I've connected a Genesis, a C64 and an Atari 8-bit to my projector, I don't think going 4K would help :P

My second monitor's a CRT, but my TV's actually from that very short window when they made SD LCDs.

Indeed :)

On the other hand, choosing a projector/TV that uses a suitable upscaling algorithm could change the result dramatically. There was a nice LTT video about that: https://www.youtube.com/watch?v=bUCc5NGEthA. Of course, if you use an external device for that, then there's no issue.